Q-Learning

The Actor-Critic algorithm fits 2 function approximators: one for the policy, and one for the value function. A key problem with the policy gradient method is the high variance in the gradient update. Can we omit Policy Gradients completely?

Key Ideas

- If we have a policy

- We can compute the gradient to increase the probability of good

actions

- It can be shown that in a fully-observed MDP, there is a policy that is both deterministic and optimal

Policy Iteration

- evaluate

- set

Value Iteration

Skips the explicit policy representation altogether. Key idea: argmax of advantage function is the same as argmax of Q-function.

- set

- set

This simplifies the dynamic programming problem in policy iteration.

Fitted Value Iteration

We can use any most function approximators to represent

- set

- set

This algorithm suffers from the curse of dimensionality. When the state space is large, the algorithm is computationally expensive. To get around this, we may sample states instead, with little change to the algorithm.

Fitted Q Iteration

Notice the

- set

- set

There is still a “max” hiding in

- Collect dataset

This works, even for off-policy samples (unlike Actor-Critic). In addition, there is only one network, hence no high-variance policy gradient methods. However, there are no convergence guarantees with non-linear function approximators!

Why is it off-policy? The algorithm doesn’t assume anything about the

policy: given

In step 1.3, if the algorithm is off-policy, why would we ever need to go back to collect more samples in step 1? This is because on a random, poorly performing policy, we might not access some interesting states, that we would get after learning a better policy through Q-iteration.

Exploration in Q-learning

The policy used in Q-learning is deterministic. To get around this, we use a different, stochastic policy in step 1, when sampling actions to take. An example of such a policy is the epsilon-greedy, or Boltzmann exploration policy.

Non-Tabular Value Function Learning

In the tabular case, we have a Bellman contraction

When we do fitted value iteration, we have another contraction

However,

Q-learning is not gradient descent!

There are several problems with the regular Q-iteration algorithm:

- Samples are temporally correlated

- Target values are always changing

- There is a no gradient through the target value, even though it seems we are doing a single gradient update step.

- Single-sample updates

With 1 and 2, it’s possible to repeatedly overfit to the current sample.

Dealing with correlated samples

We can follow the same technique from actor-critic (synchronous/asynchronous parallel Q-learning) to alleviate correlated samples. The samples are however still temporally correlated. A better solution is to use a replay buffer.

Replay buffer

We have a buffer

Dealing with the moving target

In the online Q-learning algorithm, the target Q moves. To resolve this we can use a target network:

The use of the target network

DQN

DQN is the result of using a replay buffer, target network and some gradient clipping. See Playing Atari with Deep RL.

Double DQN

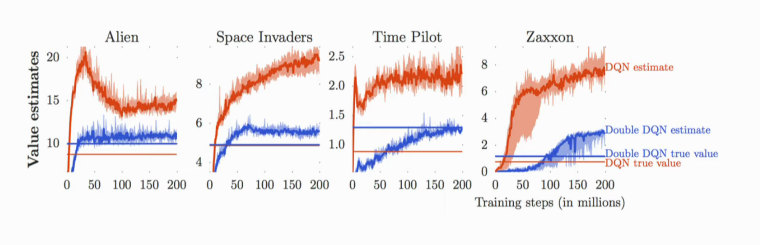

Figure 1: The predicted Q-values are much higher than the true Q-values

It has been shown imperatively that the learnt Q-values are numerically much higher than the true Q-values. Practically, this isn’t much of an issue: as the predicted Q-value increases, performance also increases.

The intuition behind why this happens, is that our target value

It is easy to show that:

becomes:

To get 2 Q-functions, we use the current and target networks:

Q-learning with stochastic optimization

Taking max over a continuous action space can be expensive. A simple approximation is:

where

Another option is to use a function class that is easy to maximize (e.g. using a quadratic function). This option is simple, but loses representational power.

The final option is to learn an approximate maximizer (e.g. DDPG). The

idea is to train another network

Q-learning

Q-learning learns an action-utility representation instead of learning

utilities. We will use the notation

A TD agent that learns a Q-function does not need a model of the form

However, this equation requires a model to be learnt, since it depends

on

The updated equation for TD Q-learning is:

which is calculated whenever action

Q-learning has a close relative called SARSA (State-Action-Reward-State-Action). The update rule for SARSA is as follows:

where

Whereas Q-learning backs up the best Q-value from the state reached in the observed transition, SARSA waits until an action is actually taken and backs up the Q-value for that action. For a greedy agent that always takes the action with best Q-value, the two algorithms are identical. When exploration is happening, they differ significanty.

Because Q-learning uses the best Q-value, it pays no attention to the actual policy being followed - it is an off-policy learning algorithm. However, SARSA is an on-policy algorithm.

Q-learning is more flexible in the sense that a Q-learning agent can learn how to behave well even when guided by a random or adversarial exploration policy. On the other hand, SARSA is more realistic: for example if the overall policy is even partly controlled by other agents, it is better to learn a Q-function for what will actually happen rather than what the agent would like to happen.

Q-learning has been shown to be sample efficient in the tabular setting (Jin et al., n.d.).

Q-learning with function approximation

To generalize over states and actions, parameterize Q with a function approximator, e.g. a neural net:

and turn this into an optimization problem minimizing the loss on the TD error:

The key problem with Q-learning is stability, coined the “deadly triad”.

- Off-policy learning

- flexible function approximation

- Bootstrapping

In the presence of all three, learning is unstable. DQN is the first algorithm that stabilized deep Q-learning (Playing Atari with Deep RL).

Bibliography

Jin, Chi, Zeyuan Allen-Zhu, Sebastien Bubeck, and Michael I Jordan. n.d. “Is Q-Learning Provably Efficient?” In Advances in Neural Information Processing Systems 31, edited by S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, 4863–73. Curran Associates, Inc. http://papers.nips.cc/paper/7735-is-q-learning-provably-efficient.pdf.