Actor-Critic

Actor-critic methods improve on policy gradient methods by introducing a critic: a learned value function used to estimate how good the actor's (the policy's) actions are.

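Recall the policy gradient of the objective $J(\theta)$, written with the reward-to-go:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t})\, \hat{Q}_{i,t}, \qquad \hat{Q}_{i,t} = \sum_{t'=t}^{T} r(s_{i,t'}, a_{i,t'})$$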

The question we want to address is: can we get a better estimate of the reward-to-go?

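So far we have used a single-trajectory estimate of the reward-to-go. If we knew the true expected reward-to-go, $Q^\pi(s_t, a_t) = \sum_{t'=t}^{T} \mathbb{E}_{\pi_\theta}\left[r(s_{t'}, a_{t'}) \mid s_t, a_t\right]$, we could use it in place of the single-sample estimate and obtain a lower-variance version of the policy gradient.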
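As a minimal sketch, the single-trajectory (undiscounted) reward-to-go for every time step of one trajectory can be computed with a single backward pass over its rewards. The helper name `reward_to_go` is hypothetical.

```python
def reward_to_go(rewards):
    """Single-trajectory reward-to-go: q_hat[t] = sum of rewards[t:], computed backward in O(T)."""
    q_hat = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running += rewards[t]  # running sum of rewards from step t to the end
        q_hat[t] = running
    return q_hat


print(reward_to_go([1.0, 0.0, 2.0]))  # [3.0, 2.0, 2.0]
```

Each entry depends on the random future of one sampled trajectory, which is the source of the variance a critic tries to reduce.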

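We define the advantage function as

$$A^\pi(s_t, a_t) = Q^\pi(s_t, a_t) - V^\pi(s_t), \qquad V^\pi(s_t) = \mathbb{E}_{a_t \sim \pi_\theta(a_t \mid s_t)}\left[Q^\pi(s_t, a_t)\right]$$

which measures how much better action $a_t$ is than the average action of the policy in state $s_t$.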
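For a single state with a few discrete actions, the advantage definition can be checked numerically. This sketch assumes the true $Q^\pi(s, \cdot)$ and $\pi_\theta(\cdot \mid s)$ are given as plain lists with hypothetical values; it shows that better-than-average actions get a positive advantage and that the advantage has zero mean under the policy.

```python
def state_value(q_s, pi_s):
    """V^pi(s) = E_{a ~ pi(.|s)}[Q^pi(s, a)] for one state with discrete actions."""
    return sum(p * q for p, q in zip(pi_s, q_s))


def advantages(q_s, pi_s):
    """A^pi(s, a) = Q^pi(s, a) - V^pi(s) for every action a."""
    v = state_value(q_s, pi_s)
    return [q - v for q in q_s]


q = [1.0, 3.0]     # hypothetical Q^pi(s, a) for two actions
pi = [0.25, 0.75]  # hypothetical pi(a | s)
adv = advantages(q, pi)
print(adv)                                  # [-1.5, 0.5]
print(sum(p * a for p, a in zip(pi, adv)))  # 0.0
```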

Value Function Fitting

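Recall that the Q-function can be written in terms of the value function:

$$Q^\pi(s_t, a_t) = r(s_t, a_t) + \mathbb{E}_{s_{t+1} \sim p(s_{t+1} \mid s_t, a_t)}\left[V^\pi(s_{t+1})\right]$$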

We can choose to fit $Q^\pi$, $V^\pi$, or $A^\pi$.

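Approximating the expectation over $s_{t+1}$ with the single sampled next state, we can write:

$$A^\pi(s_t, a_t) \approx r(s_t, a_t) + V^\pi(s_{t+1}) - V^\pi(s_t)$$

so fitting $V^\pi$, which depends only on the state, is enough.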

Classic actor-critic algorithms fit $V^\pi$ with a function approximator $\hat{V}^\pi_\phi(s)$ with parameters $\phi$.

We do Monte Carlo evaluation with function approximation, estimating $V^\pi(s_{i,t})$ with the single-sample return $\sum_{t'=t}^{T} r(s_{i,t'}, a_{i,t'})$.

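Our training data consists of pairs $\left\{\left(s_{i,t},\ y_{i,t} = \sum_{t'=t}^{T} r(s_{i,t'}, a_{i,t'})\right)\right\}$, and we fit the critic by supervised regression:

$$\mathcal{L}(\phi) = \frac{1}{2}\sum_{i} \left\| \hat{V}^\pi_\phi(s_i) - y_i \right\|^2$$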

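Alternatively, we can decompose the ideal target, $y_{i,t} = \sum_{t'=t}^{T} \mathbb{E}_{\pi_\theta}\left[r(s_{t'}, a_{t'}) \mid s_{i,t}\right] \approx r(s_{i,t}, a_{i,t}) + V^\pi(s_{i,t+1})$, and use the old $\hat{V}^\pi_\phi$ in place of $V^\pi$:

$$y_{i,t} \approx r(s_{i,t}, a_{i,t}) + \hat{V}^\pi_\phi(s_{i,t+1})$$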

This is a biased estimate, but it can have much lower variance. It works well when the policy does not change much between iterations and the previous value function is a decent estimate. Because it uses the previous value function, it is also called a bootstrapped estimate.
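The regression step and the two target choices can be sketched in Python. This is a minimal sketch assuming a small discrete state space, so a lookup table `v[s]` stands in for the neural network $\hat{V}^\pi_\phi$; `fit_tabular_value` and `bootstrapped_targets` are hypothetical names. With a table, each state's fitted value converges to the average of its targets; a neural network additionally shares information across similar states.

```python
def bootstrapped_targets(rewards, next_states, dones, v_old):
    """y_i = r_i + V_old(s'_i); the value after a terminal transition is taken to be 0."""
    return [r + (0.0 if d else v_old[s2]) for r, s2, d in zip(rewards, next_states, dones)]


def fit_tabular_value(states, targets, num_states, lr=0.1, steps=500):
    """Gradient descent on L(phi) = 1/2 * sum_i (V_phi(s_i) - y_i)^2 with a table V_phi[s].

    Stable when lr * (number of samples of a state) < 2.
    """
    v = [0.0] * num_states
    for _ in range(steps):
        grad = [0.0] * num_states
        for s, y in zip(states, targets):
            grad[s] += v[s] - y  # dL/dV[s] accumulates over every sample of state s
        for s in range(num_states):
            v[s] -= lr * grad[s]
    return v


# Monte Carlo targets: state 0 was visited twice (returns 1 and 3), state 1 once (return 5).
v_mc = fit_tabular_value([0, 0, 1], [1.0, 3.0, 5.0], num_states=2)
print([round(x, 6) for x in v_mc])  # [2.0, 5.0]

# Bootstrapped targets reuse the previous critic instead of full returns.
y = bootstrapped_targets([1.0, 0.0], [1, 0], [False, True], v_mc)
print([round(x, 6) for x in y])  # [6.0, 0.0]
```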

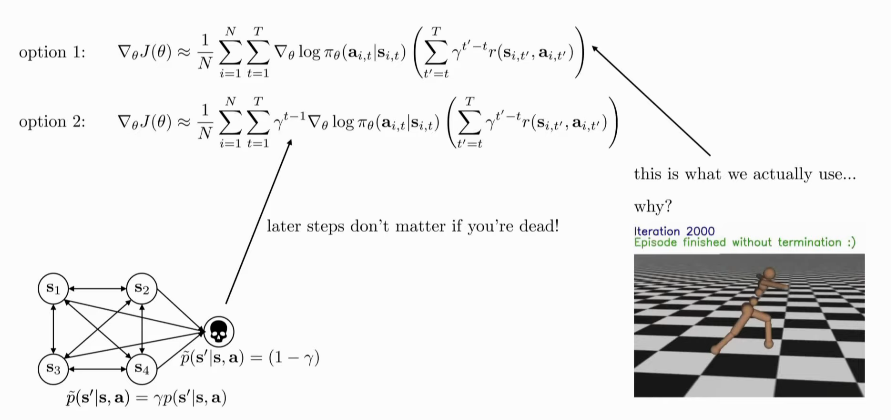

Discount Factors

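The problem with the bootstrapped estimate is that in long-horizon (or infinite-horizon) problems, $\hat{V}^\pi_\phi$ can grow without bound. A simple fix is to discount future rewards in the target:

$$y_{i,t} \approx r(s_{i,t}, a_{i,t}) + \gamma\, \hat{V}^\pi_\phi(s_{i,t+1})$$

where $\gamma \in [0, 1]$ is the discount factor.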

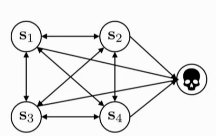
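A tiny numerical illustration of why discounting keeps the bootstrapped values bounded: take a hypothetical state that loops back to itself forever with reward 1, and repeatedly apply the update $V \leftarrow r + \gamma V$. With $\gamma = 1$ the estimate grows with every iteration; with $\gamma < 1$ it converges to $r / (1 - \gamma)$. The helper `iterate_bootstrap` is hypothetical.

```python
def iterate_bootstrap(gamma, reward=1.0, iterations=1000):
    """Apply V <- r + gamma * V repeatedly for a hypothetical state that loops to itself forever."""
    v = 0.0
    for _ in range(iterations):
        v = reward + gamma * v
    return v


print(iterate_bootstrap(1.0))   # 1000.0: keeps growing with more iterations
print(iterate_bootstrap(0.99))  # close to 1 / (1 - 0.99) = 100
```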

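We can think of $\gamma$ as changing the MDP: at every step, with probability $1 - \gamma$ the agent transitions to an absorbing "death" state where it receives no further reward, so a reward $t$ steps ahead is reached only with probability $\gamma^t$.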

Figure 1:
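The interpretation of $\gamma$ as a per-step probability $1 - \gamma$ of entering an absorbing zero-reward state can be checked by simulation. This sketch (hypothetical helper, assuming a constant reward of 1 per step and a fixed seed) averages the undiscounted return over many episodes; the result should be close to the discounted return $\sum_t \gamma^t = 1 / (1 - \gamma)$.

```python
import random


def return_with_death_state(gamma, reward=1.0, episodes=20000, seed=0):
    """Average undiscounted return when, after each step, the episode ends with probability 1 - gamma."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(episodes):
        while True:
            total += reward
            if rng.random() >= gamma:  # probability 1 - gamma: absorbing zero-reward state
                break
    return total / episodes


print(return_with_death_state(0.9))  # close to 1 / (1 - 0.9) = 10
```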

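We can then modify the policy gradient to use the discounted advantage estimate:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t}) \left(r(s_{i,t}, a_{i,t}) + \gamma\, \hat{V}^\pi_\phi(s_{i,t+1}) - \hat{V}^\pi_\phi(s_{i,t})\right)$$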

Algorithm

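- Sample $\{s_i, a_i\}$ from $\pi_\theta(a \mid s)$ (run the policy).

- Fit $\hat{V}^\pi_\phi(s)$ to the sampled reward sums.

- Evaluate $\hat{A}^\pi(s_i, a_i) = r(s_i, a_i) + \gamma\, \hat{V}^\pi_\phi(s'_i) - \hat{V}^\pi_\phi(s_i)$.

- Compute $\nabla_\theta J(\theta) \approx \sum_i \nabla_\theta \log \pi_\theta(a_i \mid s_i)\, \hat{A}^\pi(s_i, a_i)$.

- Update $\theta \leftarrow \theta + \alpha \nabla_\theta J(\theta)$.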
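The batch actor-critic loop can be sketched end to end on a toy problem. This is a minimal sketch under several assumptions: a hypothetical two-state task where the reward is 1 when the action equals the state, a tabular softmax policy `theta[s][a]`, a tabular critic `v[s]`, episodes cut off after 5 steps (treated as terminal), and critic targets built with the discounted bootstrapped rule $y = r + \gamma \hat{V}(s')$ rather than full reward sums. All names and hyperparameters are illustrative.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def sample_transitions(theta, rng, n_episodes=20, horizon=5):
    """Step 1: run the current policy on a hypothetical two-state task (reward 1 when action == state)."""
    batch = []
    for _ in range(n_episodes):
        s = rng.randrange(2)
        for t in range(horizon):
            probs = softmax(theta[s])
            a = 0 if rng.random() < probs[0] else 1
            r = 1.0 if a == s else 0.0
            s_next = rng.randrange(2)
            done = t == horizon - 1  # the time limit is treated as termination
            batch.append((s, a, r, s_next, done))
            s = s_next
    return batch


def batch_actor_critic(iterations=200, gamma=0.9, lr_actor=0.5, lr_critic=0.5, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0], [0.0, 0.0]]  # policy logits theta[s][a]
    v = [0.0, 0.0]                    # critic V_phi(s)
    for _ in range(iterations):
        batch = sample_transitions(theta, rng)
        # Step 2: targets from the old critic, then a few gradient steps on 1/2 * mean (V(s) - y)^2.
        targets = [r + (0.0 if done else gamma * v[s2]) for (_, _, r, s2, done) in batch]
        for _ in range(10):
            grad_v = [0.0, 0.0]
            for (s, _, _, _, _), y in zip(batch, targets):
                grad_v[s] += v[s] - y
            for s in range(2):
                v[s] -= lr_critic * grad_v[s] / len(batch)
        # Steps 3-4: advantage A = r + gamma * V(s') - V(s) and the policy gradient.
        grad_theta = [[0.0, 0.0], [0.0, 0.0]]
        for s, a, r, s2, done in batch:
            adv = r + (0.0 if done else gamma * v[s2]) - v[s]
            probs = softmax(theta[s])
            for b in range(2):
                # d log pi(a|s) / d theta[s][b] = 1[a == b] - pi(b|s) for a softmax policy.
                grad_theta[s][b] += ((1.0 if a == b else 0.0) - probs[b]) * adv
        # Step 5: gradient ascent, theta <- theta + alpha * grad J.
        for s in range(2):
            for b in range(2):
                theta[s][b] += lr_actor * grad_theta[s][b] / len(batch)
    return theta, v


theta, v = batch_actor_critic()
print([round(softmax(theta[s])[s], 3) for s in range(2)])  # probability of the rewarded action per state
```

Because the next state here does not depend on the action, the critic's bias only acts as a state-dependent baseline, so the policy still moves toward the rewarded action.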

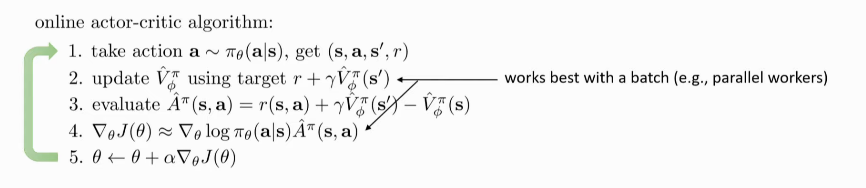

Online Actor-Critic

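Online actor-critic updates the critic and the actor after every single transition, which amounts to a batch size of one. This is a bad idea with large neural networks, whose gradient updates are too noisy when computed from a single sample, so we need to use multiple samples per update.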
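A minimal sketch of one online update, assuming a tabular softmax policy `theta[s][a]` and a tabular critic `v[s]` (hypothetical names and learning rates). The critic takes one step toward the bootstrapped target $r + \gamma \hat{V}(s')$, then the actor steps along $\nabla_\theta \log \pi_\theta(a \mid s)\, \hat{A}(s, a)$ computed from this single transition, so every update rests on one noisy sample.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def online_actor_critic_step(theta, v, transition, gamma=0.99, lr_actor=0.1, lr_critic=0.1):
    """Update theta and v in place from one transition (s, a, r, s_next, done); return the advantage."""
    s, a, r, s_next, done = transition
    # Critic: one step toward the bootstrapped target y = r + gamma * V(s').
    y = r + (0.0 if done else gamma * v[s_next])
    v[s] += lr_critic * (y - v[s])
    # Advantage with the updated critic: A = r + gamma * V(s') - V(s).
    adv = r + (0.0 if done else gamma * v[s_next]) - v[s]
    # Actor: the gradient of log softmax w.r.t. theta[s][b] is 1[a == b] - pi(b | s).
    probs = softmax(theta[s])
    for b in range(len(theta[s])):
        theta[s][b] += lr_actor * ((1.0 if a == b else 0.0) - probs[b]) * adv
    return adv


theta = [[0.0, 0.0]]  # one state, two actions
v = [0.0]
adv = online_actor_critic_step(theta, v, (0, 1, 1.0, 0, True))
print(round(adv, 6), softmax(theta[0])[1] > 0.5)  # 0.9 True
```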


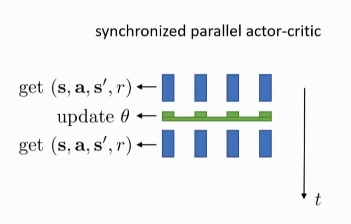

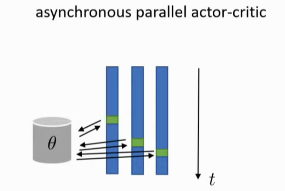

The purpose of multiple workers here is not to make the algorithm faster, but to make it work by increasing the batch size.
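A minimal sketch of a synchronous setup, assuming the workers are simulated one after another in a single process (real implementations typically run them in separate processes or threads): each worker keeps its own environment state, all workers take one step, and their transitions are stacked into one batch for a single update. `toy_env_step`, `uniform_policy`, and `synchronous_parallel_step` are hypothetical names.

```python
import random


def toy_env_step(s, a, rng):
    """Hypothetical toy environment: reward 1 when the action matches the state; next state is random."""
    r = 1.0 if a == s else 0.0
    return r, rng.randrange(2)


def synchronous_parallel_step(worker_states, policy, rng):
    """Advance every worker by one step in lockstep and return all transitions as one batch.

    worker_states[i] is the current state of worker i; it is updated in place.
    """
    batch = []
    for i, s in enumerate(worker_states):
        a = policy(s, rng)
        r, s_next = toy_env_step(s, a, rng)
        batch.append((s, a, r, s_next))
        worker_states[i] = s_next
    return batch


def uniform_policy(s, rng):
    """Placeholder policy that picks one of two actions uniformly at random."""
    return rng.randrange(2)


rng = random.Random(0)
states = [rng.randrange(2) for _ in range(8)]  # 8 workers
batch = synchronous_parallel_step(states, uniform_policy, rng)
print(len(batch))  # 8 transitions feed a single update instead of 1
```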

Generalized Advantage Estimation

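The GAE estimator

$$\hat{A}^\pi_{\mathrm{GAE}}(s_t, a_t) = \sum_{n=1}^{\infty} w_n\, \hat{A}^\pi_n(s_t, a_t)$$

is a weighted combination of $n$-step returns, where

$$\hat{A}^\pi_n(s_t, a_t) = \sum_{t'=t}^{t+n-1} \gamma^{t'-t}\, r(s_{t'}, a_{t'}) + \gamma^n\, \hat{V}^\pi_\phi(s_{t+n}) - \hat{V}^\pi_\phi(s_t)$$

If we choose $w_n \propto \lambda^{n-1}$, this simplifies to

$$\hat{A}^\pi_{\mathrm{GAE}}(s_t, a_t) = \sum_{t'=t}^{\infty} (\gamma\lambda)^{t'-t}\, \delta_{t'}$$

where

$$\delta_{t'} = r(s_{t'}, a_{t'}) + \gamma\, \hat{V}^\pi_\phi(s_{t'+1}) - \hat{V}^\pi_\phi(s_{t'})$$

is the one-step advantage estimate. The role of $\gamma$ is similar to that of $\lambda$: both down-weight rewards further in the future, trading lower variance for more bias.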
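The GAE sum can be computed in one backward pass using $\hat{A}_t = \delta_t + \gamma\lambda\, \hat{A}_{t+1}$. A minimal sketch for one finite trajectory, assuming `values` holds $\hat{V}^\pi_\phi(s_0), \dots, \hat{V}^\pi_\phi(s_T)$ (one more entry than `rewards`, with the last set to 0 if $s_T$ is terminal); `gae` is a hypothetical helper name and the defaults $\gamma = 0.99$, $\lambda = 0.95$ are illustrative.

```python
def gae(rewards, values, gamma=0.99, lam=0.95):
    """A_t = sum_{t'>=t} (gamma * lam)^(t' - t) * delta_t', delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)."""
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # one-step advantage estimate
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


print(gae([1.0, 1.0], [0.5, 0.5, 0.0], gamma=1.0, lam=0.0))  # [1.0, 0.5]
print(gae([1.0, 1.0], [0.5, 0.5, 0.0], gamma=1.0, lam=1.0))  # [1.5, 0.5]
```

Setting $\lambda = 0$ recovers the one-step estimates $\delta_t$ (lower variance, more bias), while $\lambda = 1$ recovers the sampled return minus the baseline $\hat{V}^\pi_\phi(s_t)$ (higher variance, less bias).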

Actor-critic methods need to balance learning speed against stability. Notable approaches include:

- Conservative Policy Iteration (CPI)
  - Proposes a surrogate objective and guarantees monotonic improvement under a specific state distribution.

- Trust Region Policy Optimization (TRPO)
  - Approximates CPI with a trust-region constraint.

- Proximal Policy Optimization (PPO)
  - Replaces TRPO's constraint with a KL penalty or clipping (computationally efficient).

- Soft Actor-Critic (SAC)
  - Stabilizes learning by jointly maximizing expected reward and policy entropy (based on maximum entropy RL).

- Optimistic Actor Critic (OAC)
  - Focuses on exploration in deep actor-critic approaches.
  - Key insight: existing approaches tend to explore conservatively.
  - Key result: optimistic exploration leads to efficient, stable learning in modern actor-critic methods.
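As an illustration of the clipping idea in PPO, here is a minimal sketch of the clipped surrogate objective $\frac{1}{N}\sum_i \min\left(\rho_i \hat{A}_i,\ \mathrm{clip}(\rho_i, 1-\epsilon, 1+\epsilon)\, \hat{A}_i\right)$ with probability ratio $\rho_i = \pi_\theta(a_i \mid s_i) / \pi_{\theta_{\text{old}}}(a_i \mid s_i)$. It assumes log-probabilities and advantages are given as plain lists, uses $\epsilon = 0.2$ as an illustrative default, and does not show the KL-penalty variant; `ppo_clip_objective` is a hypothetical name.

```python
import math


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean over samples of min(rho * A, clip(rho, 1 - eps, 1 + eps) * A), rho = pi_new / pi_old."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        rho = math.exp(lp_new - lp_old)                     # probability ratio
        rho_clipped = min(max(rho, 1.0 - eps), 1.0 + eps)
        total += min(rho * adv, rho_clipped * adv)          # pessimistic (lower) bound
    return total / len(advantages)


# The new policy doubled the probability of an action with advantage 1: the gain is capped at 1.2.
print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))  # 1.2
```

Taking the minimum removes any incentive to push the ratio beyond $1 \pm \epsilon$ in the direction the advantage favors, which keeps each update close to the old policy without TRPO's constrained optimization.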