Policy Gradients

Key Idea

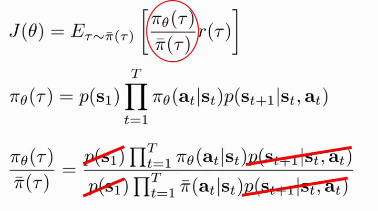

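The objective is:

$$J(\theta) = E_{\tau \sim p_\theta(\tau)}\left[\sum_t r(s_t, a_t)\right]$$

To evaluate the objective, we need to estimate this expectation, typically by sampling $N$ trajectories from the policy:

$$J(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \sum_t r(s_{i,t}, a_{i,t})$$

Recall that:

$$\nabla_\theta p_\theta(\tau) = p_\theta(\tau) \nabla_\theta \log p_\theta(\tau)$$

Applying this identity to the gradient of the objective and sampling as before gives:

$$\nabla_\theta J(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \left(\sum_t \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t})\right)\left(\sum_t r(s_{i,t}, a_{i,t})\right)$$

This makes high-reward trajectories more likely and low-reward trajectories less likely, with each log-probability gradient scaled by the trajectory's reward.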
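As a rough illustration of estimating the gradient from samples, here is a minimal sketch for a one-step problem. The softmax policy, the two-action reward table, and all function names are assumptions made for this example, not part of the notes.

```python
import math
import random

def softmax(theta):
    """Action probabilities of a softmax policy with logits theta."""
    m = max(theta)
    exps = [math.exp(x - m) for x in theta]
    z = sum(exps)
    return [e / z for e in exps]

def grad_log_pi(theta, action):
    """Gradient of log softmax(theta)[action] with respect to theta."""
    probs = softmax(theta)
    return [(1.0 if k == action else 0.0) - p for k, p in enumerate(probs)]

def policy_gradient_estimate(theta, rewards, n_samples, rng):
    """Monte Carlo estimate: average of grad log pi(a_i) * r(a_i)."""
    probs = softmax(theta)
    grad = [0.0] * len(theta)
    for _ in range(n_samples):
        action = rng.choices(range(len(theta)), weights=probs)[0]
        g = grad_log_pi(theta, action)
        for k in range(len(theta)):
            grad[k] += g[k] * rewards[action] / n_samples
    return grad

rng = random.Random(0)
theta = [0.0, 0.0]      # uniform policy over two actions
rewards = [1.0, 0.0]    # hypothetical reward for each action
estimate = policy_gradient_estimate(theta, rewards, 20000, rng)
```

For this toy setup the exact gradient is $\pi_k (r_k - J)$, which is $(0.25, -0.25)$ at the uniform policy, so the estimate should land close to that.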

Comparison to Maximum Likelihood

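- policy gradient: $\nabla_\theta J(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \left(\sum_t \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t})\right)\left(\sum_t r(s_{i,t}, a_{i,t})\right)$

- maximum likelihood: $\nabla_\theta J_{\mathrm{ML}}(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \sum_t \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t})$

The policy gradient is the maximum likelihood gradient with each trajectory's term weighted by its total reward.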
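The relationship between the two gradients can be sketched in a few lines. The inputs here (one summed log-probability gradient per trajectory, plus one total reward per trajectory) are assumed to be precomputed, and the numbers are made up for illustration.

```python
def max_likelihood_gradient(grad_log_probs):
    """Average of the per-trajectory log-probability gradients."""
    n = len(grad_log_probs)
    dim = len(grad_log_probs[0])
    return [sum(g[k] for g in grad_log_probs) / n for k in range(dim)]

def policy_gradient(grad_log_probs, returns):
    """Same average, but each trajectory is weighted by its total reward."""
    n = len(grad_log_probs)
    dim = len(grad_log_probs[0])
    return [
        sum(g[k] * r for g, r in zip(grad_log_probs, returns)) / n
        for k in range(dim)
    ]

# Two hypothetical trajectories with 2-dimensional gradients.
grad_log_probs = [[1.0, -1.0], [-0.5, 0.5]]
returns = [2.0, 0.0]

ml_grad = max_likelihood_gradient(grad_log_probs)
pg_grad = policy_gradient(grad_log_probs, returns)
```

With every return set to 1, `policy_gradient` reduces to `max_likelihood_gradient`, which is the sense in which the policy gradient is a reward-weighted version of maximum likelihood.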

Partial Observability

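The policy gradient method does not assume that the system satisfies the Markov assumption. The algorithm only requires the ability to generate samples and a function approximator for $\pi_\theta(a_t \mid o_t)$, so it applies unchanged when the policy is conditioned on observations $o_t$ rather than states.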

Issues

- Policy gradients have high variance: the gradient estimate is noisy, and adding a constant to every reward, which leaves the expected gradient unchanged, can change the sampled estimate substantially

Properties of Policy gradients

- On-policy

The objective is an expectation over trajectories sampled from the current policy, so new samples are needed after every update. This can be turned into an off-policy method using importance sampling.

Figure 1: Off-policy policy gradients

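Problem: with large $T$, the first term, the product of per-step importance ratios $\prod_{t=1}^{T} \pi_{\theta'}(a_t \mid s_t) / \pi_\theta(a_t \mid s_t)$, becomes exponentially large or small.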
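To get a feel for how quickly this happens, the sketch below multiplies per-step probability ratios over a trajectory. The function name and the constant per-step probabilities are assumptions chosen for illustration; the product is accumulated in log space only for numerical convenience.

```python
import math

def trajectory_importance_weight(new_probs, old_probs):
    """Product over time steps of pi_new(a_t|s_t) / pi_old(a_t|s_t)."""
    log_weight = sum(
        math.log(p_new) - math.log(p_old)
        for p_new, p_old in zip(new_probs, old_probs)
    )
    return math.exp(log_weight)

# Hypothetical case: every step has a ratio of 0.9 or 1.1.
weights_small = {}
weights_large = {}
for horizon in (10, 100, 1000):
    weights_small[horizon] = trajectory_importance_weight(
        [0.45] * horizon, [0.5] * horizon
    )
    weights_large[horizon] = trajectory_importance_weight(
        [0.55] * horizon, [0.5] * horizon
    )
```

Even with per-step ratios this close to 1, the weight at a horizon of 1000 differs from 1 by more than forty orders of magnitude in either direction.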

Variance Reduction

Causality

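The policy at time $t'$ cannot affect the reward at time $t$ when $t < t'$, so each log-probability gradient only needs to be multiplied by the rewards that come after it.

This is still an unbiased estimator, and has lower variance because the gradients are multiplied by smaller values (sums over fewer reward terms). This is often written as:

$$\nabla_\theta J(\theta) \approx \frac{1}{N} \sum_{i=1}^{N} \sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{i,t} \mid s_{i,t}) \, \hat{Q}_{i,t}$$

where $\hat{Q}_{i,t} = \sum_{t'=t}^{T} r(s_{i,t'}, a_{i,t'})$ is the reward-to-go from time step $t$.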

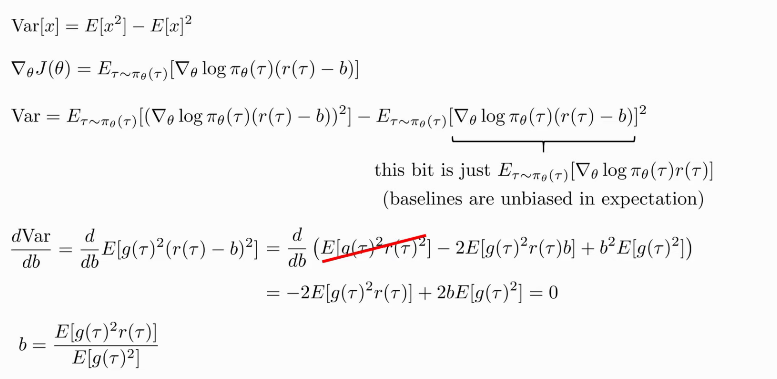
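The reward-to-go used by the causality trick can be computed in a single backward pass over a trajectory's rewards. This is a minimal undiscounted sketch; the function name is an assumption.

```python
def rewards_to_go(rewards):
    """Return a list whose entry t is the sum of rewards from step t onward."""
    result = [0.0] * len(rewards)
    running = 0.0
    # Walk backward so each entry reuses the sum already built for later steps.
    for t in reversed(range(len(rewards))):
        running += rewards[t]
        result[t] = running
    return result

example = rewards_to_go([1.0, 2.0, 3.0])
```

Each entry is never larger in count of terms than the full trajectory return, which is where the variance reduction comes from.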

Baseline Reduction

Subtracting a baseline $b$ from the reward leaves the gradient estimate unbiased in expectation, and can reduce its variance. We can compute the optimal baseline by writing out the variance of the gradient and setting its derivative with respect to $b$ to zero.

Figure 2: Computing the optimal baseline

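The result, $b = E[g(\tau)^2 r(\tau)] / E[g(\tau)^2]$ with $g(\tau) = \nabla_\theta \log p_\theta(\tau)$, is just the expected reward, but weighted by gradient magnitudes.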
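A small numerical sketch of this baseline, assuming a single scalar parameter so that each trajectory contributes one gradient value `g` and one total reward `r` (the sample values are made up). Because the mean of the estimator does not depend on $b$ in expectation, the code compares the second moment of $g \cdot (r - b)$, which is the part of the variance that the baseline changes.

```python
def optimal_baseline(grads, rewards):
    """Sample estimate of b = E[g^2 r] / E[g^2] for a scalar parameter."""
    numerator = sum(g * g * r for g, r in zip(grads, rewards))
    denominator = sum(g * g for g in grads)
    return numerator / denominator

def second_moment(grads, rewards, baseline):
    """Sample mean of (g * (r - b))^2."""
    n = len(grads)
    return sum((g * (r - baseline)) ** 2 for g, r in zip(grads, rewards)) / n

# Hypothetical per-trajectory gradients and total rewards.
grads = [0.5, -0.5, 1.5, -1.5]
rewards = [1.0, 3.0, 2.0, 6.0]

b_opt = optimal_baseline(grads, rewards)
b_mean = sum(rewards) / len(rewards)

m_none = second_moment(grads, rewards, 0.0)
m_mean = second_moment(grads, rewards, b_mean)
m_opt = second_moment(grads, rewards, b_opt)
```

In this example the average-reward baseline already removes most of the second moment, and the gradient-weighted baseline lowers it a little further.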

Policy Gradient in practice

- Gradients have high variance

- Consider using much larger batches

- Tweaking learning rates might be important

- Adaptive learning rates are fine, and there are also learning rate adjustment methods designed specifically for policy gradients

REINFORCE

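- For each episode,

  - generate a trajectory $s_0, a_0, r_0, \dots, s_T$ by running $\pi_\theta$

  - For each step $t$,

    - compute the advantage estimate $\hat{A}_t$; the advantage function tells you how good this action is relative to the average action in that state

    - $\theta = \theta + \alpha \, \hat{A}_t \, \nabla_\theta \log \pi_\theta(a_t \mid s_t)$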
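The loop above can be sketched end to end on a toy problem. Everything specific here is an assumption made for illustration: a stateless task where action 0 pays reward 1 and action 1 pays 0, a two-logit softmax policy, the undiscounted reward-to-go standing in for the advantage, and the step size and episode count.

```python
import math
import random

def softmax(theta):
    """Action probabilities of a softmax policy with logits theta."""
    m = max(theta)
    exps = [math.exp(x - m) for x in theta]
    z = sum(exps)
    return [e / z for e in exps]

def run_episode(theta, horizon, rng):
    """Hypothetical toy task: action 0 pays reward 1, action 1 pays 0."""
    probs = softmax(theta)
    actions = [rng.choices([0, 1], weights=probs)[0] for _ in range(horizon)]
    rewards = [1.0 if a == 0 else 0.0 for a in actions]
    return actions, rewards

def reinforce(num_episodes=2000, horizon=5, lr=0.02, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    for _ in range(num_episodes):
        actions, rewards = run_episode(theta, horizon, rng)
        # Reward-to-go for every step, computed backward.
        returns, running = [0.0] * horizon, 0.0
        for t in reversed(range(horizon)):
            running += rewards[t]
            returns[t] = running
        # One gradient ascent step per time step of the episode.
        for action, g_t in zip(actions, returns):
            probs = softmax(theta)
            for k in range(len(theta)):
                grad_log = (1.0 if k == action else 0.0) - probs[k]
                theta[k] += lr * grad_log * g_t
    return theta

theta = reinforce()
prob_good_action = softmax(theta)[0]
```

After training, the policy should put most of its probability on the rewarded action. Subtracting a baseline from `g_t` would be the natural next step for reducing the noise in these updates.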

Objective:

Actor-critic methods use a learned estimate (e.g. $\hat{A}(s, a) = \hat{Q}(s, a) - \hat{V}(s)$).

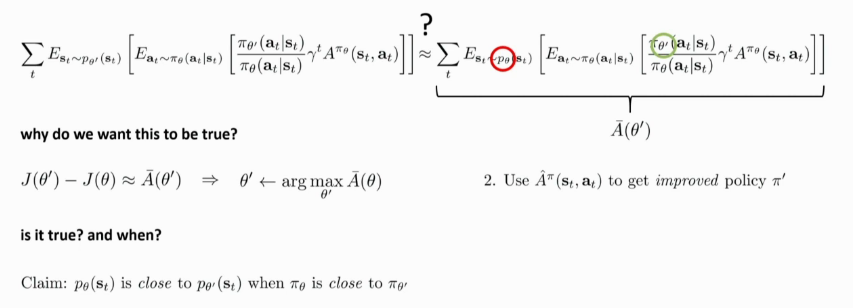

Policy Gradients and Policy Iteration

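Policy gradients involve estimating the advantage $\hat{A}^{\pi}(s_t, a_t)$ of the current policy $\pi$ and using it to obtain an improved policy $\pi'$, which mirrors policy iteration.

We have an expectation under the new policy's trajectory distribution $p_{\theta'}(\tau)$, but our samples come from the current policy $\pi_\theta$. Importance sampling corrects the action distribution, leaving a mismatch in the state distribution.

We can bound the distribution change from $p_\theta(s_t)$ to $p_{\theta'}(s_t)$ when $\pi_{\theta'}$ is close to $\pi_\theta$.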

We can measure the distribution mismatch with KL divergence.
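For discrete action distributions the KL divergence is a short sum. The sketch below assumes both policies are given as probability lists over the same actions at a single state, and that the second distribution is nonzero wherever the first is.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) for two discrete distributions over the same actions."""
    return sum(
        p_k * math.log(p_k / q_k)
        for p_k, q_k in zip(p, q)
        if p_k > 0.0  # terms with p_k == 0 contribute nothing
    )

old_policy = [0.5, 0.5]
new_policy = [0.9, 0.1]
mismatch = kl_divergence(old_policy, new_policy)
```

The divergence is zero when the two policies agree and grows as they move apart; note that it is not symmetric in its arguments.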

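Then, we can enforce the constraint of a small KL divergence, $D_{\mathrm{KL}}(\pi_{\theta'} \,\|\, \pi_\theta) \le \epsilon$, by using a loss function with a Lagrange multiplier $\lambda$:

- Maximize $\mathcal{L}(\theta', \lambda) = \sum_t E_{s_t \sim p_\theta(s_t)}\left[E_{a_t \sim \pi_\theta(a_t \mid s_t)}\left[\frac{\pi_{\theta'}(a_t \mid s_t)}{\pi_\theta(a_t \mid s_t)} A^{\pi_\theta}(s_t, a_t)\right]\right] - \lambda \left(D_{\mathrm{KL}}(\pi_{\theta'} \,\|\, \pi_\theta) - \epsilon\right)$ with respect to $\theta'$

Intuition: raise $\lambda$ if the constraint is violated too much, and lower it otherwise.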
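One common way to act on this intuition is a dual gradient step on the multiplier, alternating with the maximization over the policy parameters. The sketch below assumes that scheme; the function name, step size, and example numbers are illustrative only.

```python
def update_multiplier(lam, kl, epsilon, step_size):
    """Dual ascent step: raise lam when kl > epsilon, lower it otherwise.

    The multiplier is clipped at zero so the penalty never turns into a bonus.
    """
    return max(0.0, lam + step_size * (kl - epsilon))

# Constraint violated (kl above epsilon): the multiplier goes up.
lam_up = update_multiplier(lam=1.0, kl=0.05, epsilon=0.01, step_size=10.0)

# Constraint satisfied with slack: the multiplier goes down.
lam_down = update_multiplier(lam=1.0, kl=0.0, epsilon=0.01, step_size=10.0)
```

A larger multiplier penalizes the KL term more heavily in the next maximization, pulling the new policy back toward the old one.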

Alternatively, optimize within some region, and use a Taylor expansion to approximate the function within that region.