Linear Algebra

Definitions

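A matrix of dimensions M-by-N looks like:

\begin{equation}
A = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1N} \\
a_{21} & a_{22} & \cdots & a_{2N} \\
\vdots & \vdots & \ddots & \vdots \\
a_{M1} & a_{M2} & \cdots & a_{MN}
\end{bmatrix}
\end{equation}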

Its transpose swaps rows and columns, so the dimensions flip. For example, transposing an N-by-1 matrix gives a 1-by-N matrix.

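The magnitude of a vector is given by the square root of the sum of its squared entries:

\begin{equation}
\lVert \vec{v} \rVert = \sqrt{v_1^2 + v_2^2 + \cdots + v_N^2}
\end{equation}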
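As a minimal sketch of these two definitions, the snippet below stores a matrix as a list of rows and assumes the magnitude meant here is the usual Euclidean length. The helper names `transpose` and `magnitude` are hypothetical, chosen only for illustration.

```python
import math

def transpose(matrix):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*matrix)]

def magnitude(vector):
    """Euclidean length: square root of the sum of squared entries."""
    return math.sqrt(sum(x * x for x in vector))

column = [[3], [4]]       # a 2-by-1 matrix (column vector)
row = transpose(column)   # a 1-by-2 matrix: [[3, 4]]
length = magnitude([3, 4])  # 5.0
```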

Linear Independence

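A set of vectors is linearly independent if no vector in the set can be written as a linear combination of the others. Equivalently, the only linear combination of the vectors that equals the zero vector is the one with all coefficients equal to zero.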

Span

The “span” of a set of vectors is the set of all their linear combinations.

Basis

A basis of a vector space is a set of linearly independent vectors that span the full space.
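To make the definition concrete, here is a small sketch restricted to 2D. It assumes two vectors form a basis of the plane exactly when the 2-by-2 determinant of the matrix with those columns is nonzero, and it uses Cramer's rule to find the coordinates of a vector in that basis. The function names are hypothetical.

```python
def det2(b1, b2):
    """Determinant of the 2-by-2 matrix whose columns are b1 and b2."""
    return b1[0] * b2[1] - b2[0] * b1[1]

def is_basis_2d(b1, b2):
    """Two 2D vectors form a basis when they are linearly independent."""
    return det2(b1, b2) != 0

def coordinates(v, b1, b2):
    """Solve c1*b1 + c2*b2 = v using Cramer's rule."""
    d = det2(b1, b2)
    if d == 0:
        raise ValueError("b1 and b2 are linearly dependent, so they are not a basis")
    return det2(v, b2) / d, det2(b1, v) / d

c1, c2 = coordinates((2, 4), (1, 1), (-1, 1))  # 3*(1, 1) + 1*(-1, 1) = (2, 4)
```

Because the basis vectors span the plane and are independent, the coordinates `c1` and `c2` exist and are unique.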

If

Special Matrices

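- diagonal matrix: a matrix with all off-diagonal entries equal to 0.

- identity matrix: a diagonal matrix with all diagonal entries equal to 1.

- symmetric matrix: a square matrix equal to its transpose.

- skew symmetric matrix: a square matrix equal to the negative of its transpose.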

Matrix properties

Solutions to linear systems

Given the matrix

If

Matrices as Linear Transformations

A transformation is linear if it fulfills two properties:

- All lines remain lines (they don’t get curved)

- The origin is fixed.

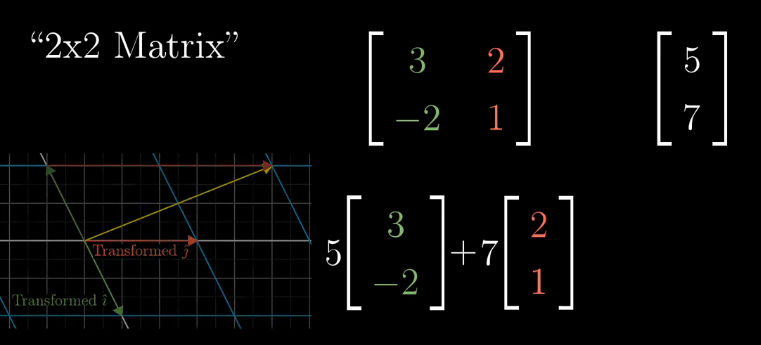

Under a linear transformation, grid lines remain parallel and evenly spaced. This property lets us compute where any vector lands just by recording where the basis vectors land: the transformed vector is the same linear combination of the transformed basis vectors.

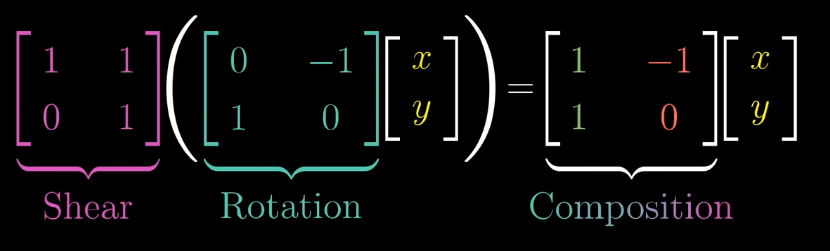

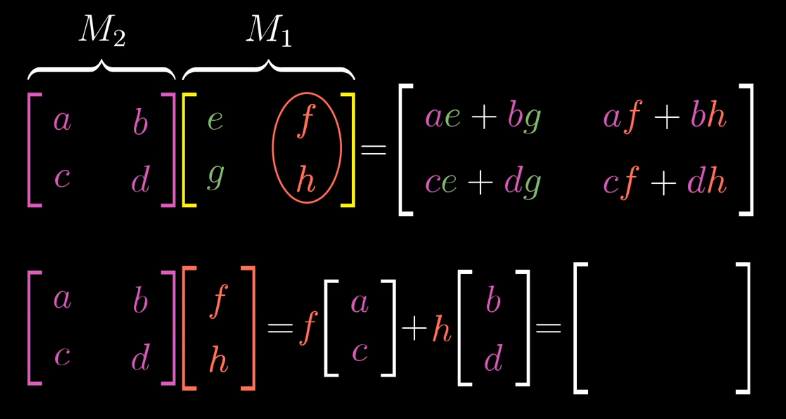
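The idea of tracking only the basis vectors can be sketched in a few lines of Python. The landing positions used below are illustrative values, not taken from the figures, and the function name `transform` is hypothetical.

```python
def transform(i_hat_lands, j_hat_lands, vector):
    """Apply a 2D linear transformation described by where the basis vectors land.

    The vector (x, y) means x * i_hat + y * j_hat, so after the transformation
    it becomes x * (transformed i_hat) + y * (transformed j_hat).
    """
    x, y = vector
    return (x * i_hat_lands[0] + y * j_hat_lands[0],
            x * i_hat_lands[1] + y * j_hat_lands[1])

# Assumed example: i_hat lands on (1, -2) and j_hat lands on (3, 0).
result = transform((1, -2), (3, 0), (-1, 2))  # (5, 2)
```

Writing the two landing positions as the columns of a 2-by-2 matrix gives the usual matrix-vector product.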

Matrix Multiplication as Composition

Often, we want to describe the effects of multiple linear transformations composed together, for example, a rotation, followed by a shear. The composition of linear transformations is also a linear transformation, and can be described with a single matrix (see above).

Hence, we can think about matrix multiplication as computing where the final basis vectors land.

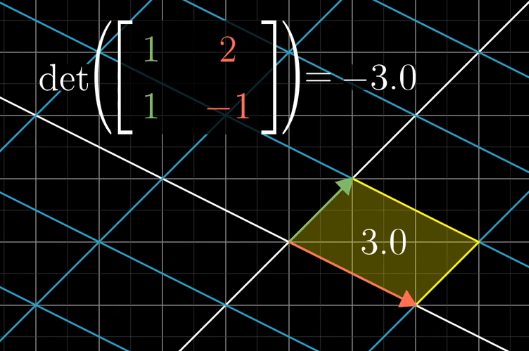
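As a sketch of composition, the snippet below multiplies a shear matrix by a rotation matrix and checks that the product has the same effect as applying the rotation first and the shear second. The specific matrices are assumed examples, and the helpers `matmul` and `apply` are hypothetical names.

```python
def matmul(left, right):
    """Matrix product of two matrices stored as lists of rows."""
    return [[sum(left[i][k] * right[k][j] for k in range(len(right)))
             for j in range(len(right[0]))]
            for i in range(len(left))]

def apply(matrix, vector):
    """Matrix-vector product."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

rotation = [[0, -1], [1, 0]]  # 90 degrees anti-clockwise
shear = [[1, 1], [0, 1]]

# The rotation is applied first, so it sits on the right of the product.
composite = matmul(shear, rotation)        # [[1, -1], [1, 0]]

v = [2, 3]
step_by_step = apply(shear, apply(rotation, v))
in_one_go = apply(composite, v)            # same result: [-1, 2]
```

The columns of `composite` are where the two basis vectors end up after both transformations.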

Determinant

Because linear transformations leave grid lines parallel and evenly spaced, the area of every unit square is scaled by the same amount.

The determinant of a transformation is the factor by which it scales the area of a unit square. If the determinant is negative, then the orientation of the resulting grid space is reversed.

In 3D space, the determinant is the signed volume of the parallelepiped that the unit cube is mapped to.

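\begin{equation}
det \left( \begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix} \right)
= a \cdot det \left( \begin{bmatrix} e & f \\ h & i \end{bmatrix} \right)
- b \cdot det \left( \begin{bmatrix} d & f \\ g & i \end{bmatrix} \right)
+ c \cdot det \left( \begin{bmatrix} d & e \\ g & h \end{bmatrix} \right)
\end{equation}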

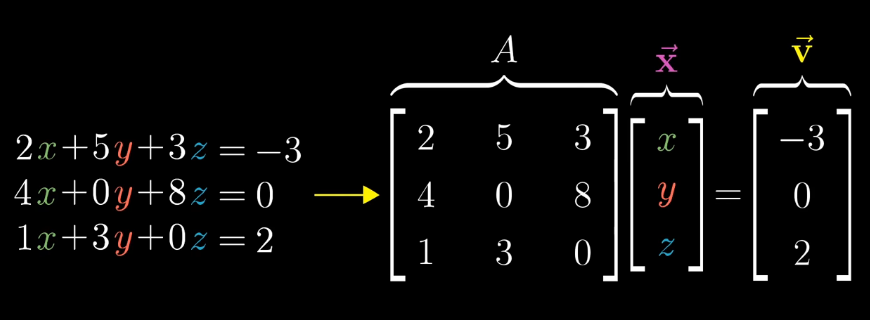
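A minimal sketch of the determinant in code, assuming the 3-by-3 case is computed by expanding along the first row into 2-by-2 determinants. The function names `det2` and `det3` are hypothetical.

```python
def det2(matrix):
    """Determinant of a 2-by-2 matrix: the signed area scaling factor."""
    (a, b), (c, d) = matrix
    return a * d - b * c

def det3(matrix):
    """Determinant of a 3-by-3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = matrix
    return (a * det2([[e, f], [h, i]])
            - b * det2([[d, f], [g, i]])
            + c * det2([[d, e], [g, h]]))

stretch = det2([[3, 0], [0, 2]])   # 6: areas are scaled by a factor of 6
flip = det2([[0, 1], [1, 0]])      # -1: orientation is reversed
volume = det3([[1, 2, 3], [4, 5, 6], [7, 8, 10]])  # -3
```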

Matrices for solving linear equations

Suppose we want to compute

Suppose the determinant of the transformation is 0. Then the transformation has no inverse. However, solutions can still exist, provided the target vector lies in the column space of the matrix.
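The two cases can be illustrated with a small 2-by-2 sketch. It assumes the system has the form "matrix times unknown vector equals target vector" and solves it with the explicit 2-by-2 inverse when the determinant is nonzero. The function name `solve_2x2` and the example numbers are hypothetical.

```python
def solve_2x2(matrix, target):
    """Solve matrix * x = target using the inverse; return None if no inverse exists."""
    (a, b), (c, d) = matrix
    det = a * d - b * c
    if det == 0:
        return None  # singular: the inverse does not exist
    p, q = target
    return ((d * p - b * q) / det, (a * q - c * p) / det)

unique = solve_2x2([[2, 1], [1, 3]], (4, 7))     # (1.0, 2.0)

# Determinant 0: no inverse, yet (1, 1) still solves the system because
# the target (3, 6) lies on the line spanned by the columns.
singular = [[1, 2], [2, 4]]
no_inverse = solve_2x2(singular, (3, 6))         # None
still_a_solution = (1 * 1 + 2 * 1, 2 * 1 + 4 * 1) == (3, 6)
```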

Rank

The rank is the number of dimensions of the output of the transformation. The rank of an M-by-N matrix is therefore at most the smaller of M and N. Rank corresponds to the maximal number of linearly independent columns of the matrix.
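One way to compute the rank, sketched here under the assumption that Gaussian elimination with exact fractions is acceptable, is to count the pivots found while reducing the matrix to row echelon form. The function name `rank` is hypothetical.

```python
from fractions import Fraction

def rank(matrix):
    """Count pivots found by Gaussian elimination (exact arithmetic)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    pivots = 0
    for col in range(n_cols):
        # Look for a nonzero entry in this column at or below the next pivot row.
        pivot = next((r for r in range(pivots, n_rows) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[pivots], rows[pivot] = rows[pivot], rows[pivots]
        # Eliminate the entries below the pivot.
        for r in range(pivots + 1, n_rows):
            factor = rows[r][col] / rows[pivots][col]
            rows[r] = [x - factor * p for x, p in zip(rows[r], rows[pivots])]
        pivots += 1
    return pivots

full = rank([[1, 0], [0, 1]])                      # 2
collapsed = rank([[1, 2], [2, 4]])                 # 1: the columns are parallel
plane = rank([[1, 2, 3], [4, 5, 6], [7, 8, 9]])    # 2
```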

Column Space

The column space of the matrix is the span of its columns, that is, the set of all possible outputs of the transformation.

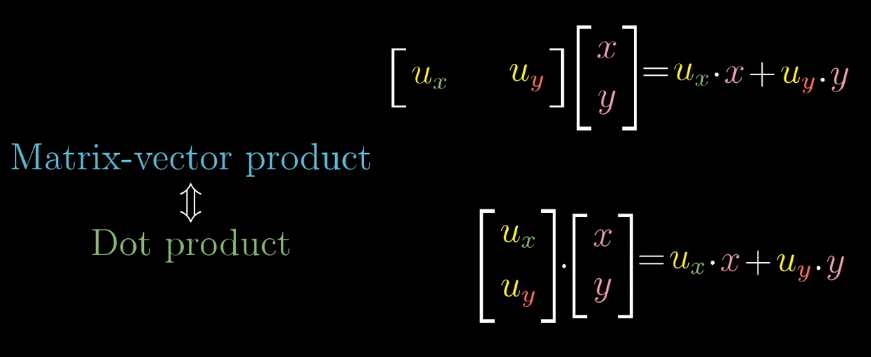

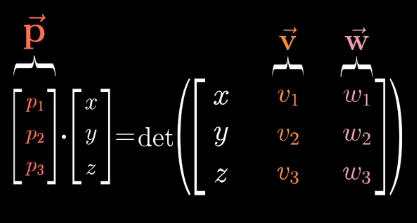

Dot Product

The dot product

We can think of

Hence,

sometimes, this is also notated as

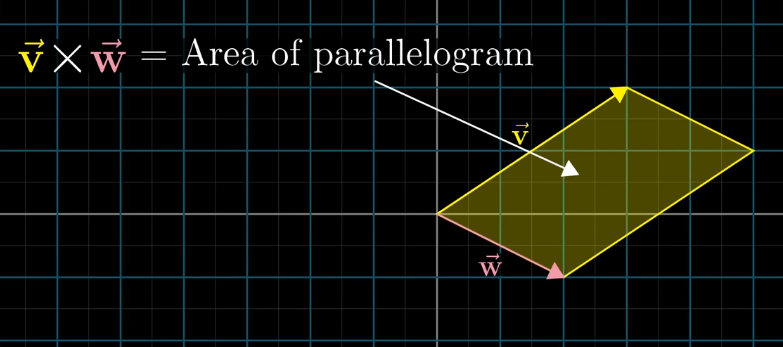

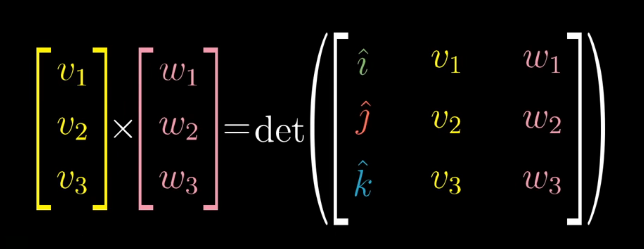
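As a short sketch, assuming the standard definition of the dot product as the sum of the products of corresponding entries (the function name `dot` is hypothetical):

```python
def dot(v, w):
    """Sum of the products of corresponding entries."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(v, w))

general = dot((1, 2), (3, 4))         # 1*3 + 2*4 = 11
perpendicular = dot((1, 0), (0, 1))   # 0 for perpendicular vectors
squared_length = dot((3, 4), (3, 4))  # 25, the squared magnitude of (3, 4)
```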

Cross Product

The cross product of

We want to find the dual vector

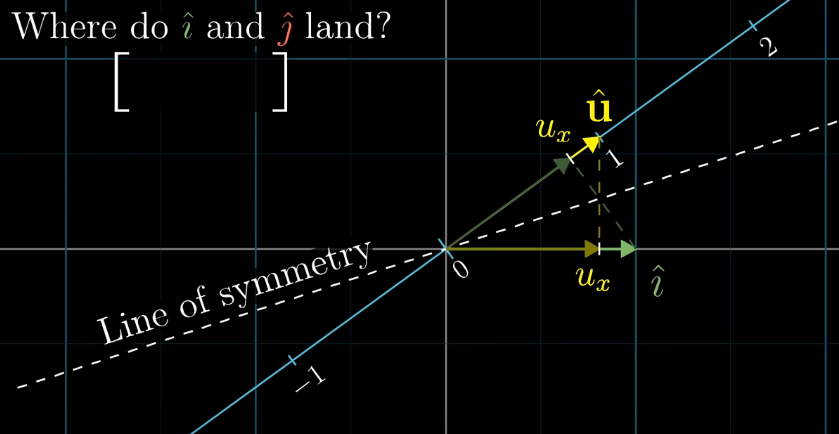
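A minimal sketch of the 3D cross product, assuming the standard component formula. The check at the end uses the fact that the result is perpendicular to both inputs; the function names are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(v, w):
    """Dot product, used here only to check perpendicularity."""
    return sum(x * y for x, y in zip(v, w))

z_axis = cross((1, 0, 0), (0, 1, 0))   # (0, 0, 1)
p = cross((1, 2, 3), (4, 5, 6))        # (-3, 6, -3)
checks = (dot(p, (1, 2, 3)), dot(p, (4, 5, 6)))  # (0, 0)
```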

How to translate a matrix

Suppose someone uses a different coordinate system (i.e. different basis vectors), which we can represent with a matrix:

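Suppose then that we want to apply a linear transformation to a vector in her coordinate system. A rotation of 90 degrees anti-clockwise is represented by the matrix

\begin{equation}
\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}
\end{equation}

in the “default” basis vector coordinate system. What does this transformation look like in the new coordinate system? Given some vector written in her language, we first apply the change of basis matrix to translate it into the default language: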

Then, we apply the transformation to the vector in the default language:

Finally, we apply the inverse of the change of basis matrix to return the vector to the other language:

This form
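The three steps above (translate into the default language, transform, translate back) can be sketched as a product of three matrices. The change of basis matrix below, whose columns are the other person's basis vectors written in default coordinates, is an assumed example, and the helper names are hypothetical.

```python
from fractions import Fraction

def matmul(left, right):
    """Matrix product of two matrices stored as lists of rows."""
    return [[sum(left[i][k] * right[k][j] for k in range(len(right)))
             for j in range(len(right[0]))]
            for i in range(len(left))]

def inverse_2x2(matrix):
    """Exact inverse of a 2-by-2 matrix with integer entries."""
    (a, b), (c, d) = matrix
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

change_of_basis = [[2, -1], [1, 1]]   # assumed example basis
rotation = [[0, -1], [1, 0]]          # 90 degrees anti-clockwise, default coordinates

# Read right to left: translate, rotate, translate back.
rotation_in_her_basis = matmul(inverse_2x2(change_of_basis),
                               matmul(rotation, change_of_basis))
# [[1/3, -2/3], [5/3, -1/3]]
```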

Eigenvectors and eigenvalues

Consider the span of a particular vector, that is, the set of vectors obtainable by scaling it. Some vectors remain on their own span even after a linear transformation is applied.

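These vectors are called eigenvectors of the transformation, and the factor by which each one is scaled is called its eigenvalue. Mathematically, this is expressed as:

\begin{equation}
A \vec{v} = \lambda \vec{v}
\end{equation}

where $A$ is the matrix of the transformation, $\vec{v}$ is a nonzero eigenvector, and $\lambda$ is its eigenvalue.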

Consider a 3D rotation. An eigenvector of this transformation with eigenvalue 1 lies along the axis of rotation, since the rotation leaves it unchanged.

Eigenvectors corresponding to different eigenvalues are linearly independent.

A real symmetric matrix has real eigenvalues, and its eigenvectors corresponding to different eigenvalues are orthogonal.
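These properties can be checked on a small symmetric example. The sketch below assumes a 2-by-2 matrix with real eigenvalues and finds them from the characteristic polynomial (eigenvalue squared, minus trace times eigenvalue, plus determinant, equals zero). The matrix, the eigenvectors, and the helper names are illustrative assumptions.

```python
import math

def eigenvalues_2x2(matrix):
    """Real eigenvalues of a 2-by-2 matrix from its characteristic polynomial."""
    (a, b), (c, d) = matrix
    trace = a + d
    det = a * d - b * c
    discriminant = trace * trace - 4 * det
    if discriminant < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(discriminant)
    return ((trace + root) / 2, (trace - root) / 2)

def apply(matrix, vector):
    """Matrix-vector product."""
    return tuple(sum(m * x for m, x in zip(row, vector)) for row in matrix)

A = [[2, 1], [1, 2]]                   # real and symmetric
large, small = eigenvalues_2x2(A)      # 3.0 and 1.0

v_large = (1, 1)                       # A scales this vector by 3
v_small = (1, -1)                      # A scales this vector by 1
orthogonal = sum(x * y for x, y in zip(v_large, v_small)) == 0
```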

Null Space

The null space of a matrix is the set of all vectors that the matrix maps to the zero vector.
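As a small sketch, assuming the null space is the set of vectors sent to the zero vector, membership can be tested by multiplying and comparing with zero. The matrix and the function names are hypothetical examples.

```python
def apply(matrix, vector):
    """Matrix-vector product."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def in_null_space(matrix, vector):
    """True when the matrix sends the vector to the zero vector."""
    return all(entry == 0 for entry in apply(matrix, vector))

A = [[1, 2], [2, 4]]                 # determinant 0, so the null space is not trivial
inside = in_null_space(A, [2, -1])   # True: 1*2 + 2*(-1) = 0 and 2*2 + 4*(-1) = 0
outside = in_null_space(A, [1, 1])   # False: A maps (1, 1) to (3, 6)
```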

Singular Value Decomposition

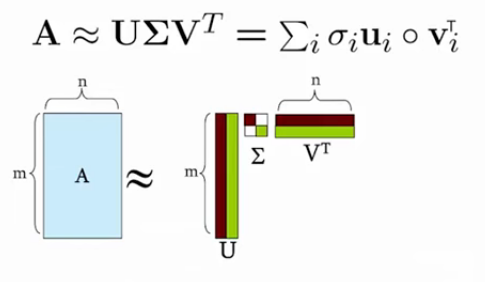

Given an input data matrix

It is always possible to decompose a real matrix

The entries on the diagonal of

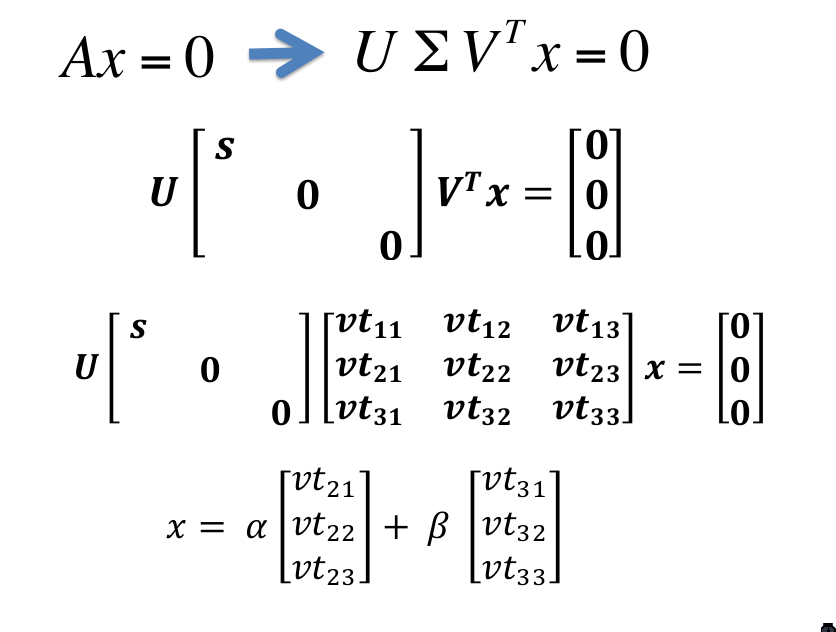

SVD can be used to solve linear equations of the form

If A is full-rank, then