Model-Based Reinforcement Learning

In model-free reinforcement learning, we assumed that the transition dynamics $p(s_{t+1} \mid s_t, a_t)$ are unknown, and we did not try to learn them.

Model-based reinforcement learning is about learning the system dynamics and using the learnt model to make decisions.

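In the stochastic open-loop case, the model defines a distribution over states given a fixed sequence of actions,

$$p(s_1, \ldots, s_T \mid a_1, \ldots, a_T) = p(s_1) \prod_{t=1}^{T-1} p(s_{t+1} \mid s_t, a_t),$$

and we’d want to choose:

$$a_1, \ldots, a_T = \arg\max_{a_1, \ldots, a_T} \mathbb{E}\left[\sum_{t} r(s_t, a_t) \,\middle|\, a_1, \ldots, a_T\right].$$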
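The open-loop choice of actions can be approximated without gradients by random shooting, a simple planner that these notes do not name: sample many candidate action sequences, estimate each one's expected return with Monte Carlo rollouts through the stochastic model, and keep the best. This is a minimal sketch assuming a hypothetical 1-D model $s' = s + a + \epsilon$ with Gaussian noise and reward $r(s, a) = -s^2$; `step`, `reward`, `expected_return` and `random_shooting` are illustrative names, not a library API.

```python
import numpy as np

def step(s, a, rng, noise_std=0.1):
    """Hypothetical stochastic dynamics model: s' = s + a + Gaussian noise."""
    return s + a + noise_std * rng.standard_normal(np.shape(s))

def reward(s, a):
    """Hypothetical reward r(s, a) = -s^2: stay close to the origin."""
    return -s**2

def expected_return(s0, actions, rng, n_rollouts=50):
    """Monte Carlo estimate of E[sum_t r(s_t, a_t) | a_1..a_T] for a fixed action sequence."""
    s = np.full(n_rollouts, float(s0))  # one state per sampled rollout
    total = np.zeros(n_rollouts)
    for a in actions:
        total += reward(s, a)
        s = step(s, a, rng)
    return total.mean()

def random_shooting(s0, horizon, rng, n_candidates=1000, a_max=1.0):
    """Open-loop planning: sample action sequences, keep the best by estimated expected return."""
    candidates = rng.uniform(-a_max, a_max, size=(n_candidates, horizon))
    scores = np.array([expected_return(s0, seq, rng) for seq in candidates])
    best = np.argmax(scores)
    return candidates[best], scores[best]

rng = np.random.default_rng(0)
plan, value = random_shooting(s0=5.0, horizon=4, rng=rng)
print(plan, value)
```

Because the whole sequence is fixed before execution, the plan cannot react to the noise it actually encounters.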

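In a closed-loop setting, the agent observes the state at every step and chooses its action based on it, so its behaviour receives feedback from the environment. Open-loop planning instead commits to the whole action sequence up front and cannot react to the states that actually occur, so in stochastic environments it can go extremely awry.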
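A small simulation makes the difference concrete. It assumes a hypothetical noisy 1-D system $s' = s + a + \epsilon$ whose goal is to keep $s$ near 0. The open-loop agent commits to its plan (here "do nothing", which would be optimal without noise) and ignores what it observes; the closed-loop agent applies the feedback law $a = -s$ at every step. Both policies and all constants are illustrative assumptions.

```python
import numpy as np

def simulate(policy, s0=0.0, horizon=20, noise_std=0.5, n_trials=2000, seed=0):
    """Roll out a policy on the hypothetical system s' = s + a + noise.

    Returns the mean squared final state over n_trials independent runs.
    """
    rng = np.random.default_rng(seed)
    s = np.full(n_trials, s0)
    for t in range(horizon):
        a = policy(t, s)
        s = s + a + noise_std * rng.standard_normal(n_trials)
    return np.mean(s**2)

def open_loop(t, s):
    """Pre-committed plan: zero action at every step, regardless of the observed state."""
    return np.zeros_like(s)

def closed_loop(t, s):
    """Feedback policy: the action depends on the state actually observed at time t."""
    return -s

open_mse = simulate(open_loop)
closed_mse = simulate(closed_loop)
# In expectation: horizon * noise_std**2 = 5.0 (open-loop) vs noise_std**2 = 0.25 (closed-loop).
print(open_mse, closed_mse)
```

Under the open-loop plan the noise accumulates like a random walk, so the final squared error grows linearly with the horizon; the closed-loop policy cancels the observed error at every step, leaving only one step's worth of noise.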

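In the stochastic closed-loop case, we optimize a policy instead of an action sequence:

$$\pi = \arg\max_{\pi} \mathbb{E}_{\tau \sim p(\tau)}\left[\sum_{t} r(s_t, a_t)\right],$$

where

$$p(\tau) = p(s_1, a_1, \ldots, s_T, a_T) = p(s_1) \prod_{t=1}^{T} \pi(a_t \mid s_t) \prod_{t=1}^{T-1} p(s_{t+1} \mid s_t, a_t).$$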

Simple Model-based RL

The most basic algorithm does this:

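- Run a base policy $\pi_0(a_t \mid s_t)$ (e.g. random actions) to collect a dataset of transitions $\mathcal{D} = \{(s, a, s')_i\}$

- Use supervised learning on these tuples to learn the dynamics $f(s, a) \approx s'$, e.g. by minimizing $\sum_i \lVert f(s_i, a_i) - s'_i \rVert^2$

- Use the learnt model for control, e.g. by planning through $f(s, a)$ to choose actions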
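A minimal end-to-end sketch of these three steps, assuming a hypothetical 1-D environment $s' = s + 0.5a$ (plus small noise) and the goal of reaching $s = 0$. For brevity, the base policy's data is drawn as independent random states and actions rather than full rollouts, the supervised learning step is a linear least-squares fit, and control is one-step greedy planning over a grid of actions; a real system would use a more expressive model and a multi-step planner.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_dynamics(s, a):
    """Hypothetical environment, unknown to the agent: s' = s + 0.5 a + small noise."""
    return s + 0.5 * a + 0.01 * rng.standard_normal(np.shape(s))

# 1. Run a base policy (uniformly random actions) to collect (s, a, s') tuples.
n = 200
s = rng.uniform(-3, 3, n)
a = rng.uniform(-5, 5, n)
s_next = true_dynamics(s, a)

# 2. Supervised learning: least-squares fit of s' ~ w0 * s + w1 * a + w2.
X = np.column_stack([s, a, np.ones(n)])
w, *_ = np.linalg.lstsq(X, s_next, rcond=None)

def model(s, a):
    """Learnt linear dynamics model."""
    return w[0] * s + w[1] * a + w[2]

# 3. Use the learnt model for control: pick the action whose predicted
#    next state is closest to the goal state 0 (one-step greedy planning).
def plan(s, candidate_actions=np.linspace(-5, 5, 1001)):
    predicted = model(s, candidate_actions)
    return candidate_actions[np.argmin(predicted**2)]

print(w, plan(2.0))
```

From $s = 2$ the planner should choose an action close to $-4$, since the true dynamics give $2 + 0.5 \cdot (-4) = 0$.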

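Problem: distributional shift. We learn the model from the state distribution visited by the base policy, $p_{\pi_0}(s_t)$, but when we act by planning through $f$ we visit states from a different distribution, $p_{\pi_f}(s_t)$, where the model may be inaccurate. The planner then makes mistakes in exactly those states, and the errors compound as it drifts further from the data.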
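The effect of distributional shift shows up even with a tiny model. This sketch assumes a hypothetical nonlinear system $s' = \sin(s) + a$, a base policy that only visits states in $[-0.5, 0.5]$ (where $\sin(s) \approx s$), and a linear dynamics model. The model is accurate on the base policy's state distribution but badly wrong for states in $[2, 3]$, which a planner could easily drive the system into.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_dynamics(s, a):
    """Hypothetical nonlinear system: s' = sin(s) + a."""
    return np.sin(s) + a

def fit_linear(s, a, s_next):
    """Least-squares fit of s' ~ w0 * s + w1 * a + w2."""
    X = np.column_stack([s, a, np.ones_like(s)])
    w, *_ = np.linalg.lstsq(X, s_next, rcond=None)
    return w

def predict(w, s, a):
    return w[0] * s + w[1] * a + w[2]

# The base policy only visits states near the origin: p_{pi_0}(s) = Uniform(-0.5, 0.5).
s_train = rng.uniform(-0.5, 0.5, 500)
a_train = rng.uniform(-1, 1, 500)
w = fit_linear(s_train, a_train, true_dynamics(s_train, a_train))

# Mean absolute prediction error on the training distribution vs on shifted states.
s_in, a_in = rng.uniform(-0.5, 0.5, 500), rng.uniform(-1, 1, 500)
s_out, a_out = rng.uniform(2.0, 3.0, 500), rng.uniform(-1, 1, 500)
err_in = np.mean(np.abs(predict(w, s_in, a_in) - true_dynamics(s_in, a_in)))
err_out = np.mean(np.abs(predict(w, s_out, a_out) - true_dynamics(s_out, a_out)))
print(err_in, err_out)
```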

Performance gap in model-based RL

Model-based RL tends to plateau in performance much earlier than model-free RL. This is because it needs a function approximator for the dynamics that:

- Does not overfit when only a small number of samples is available

- But is also expressive enough to capture the dynamics accurately

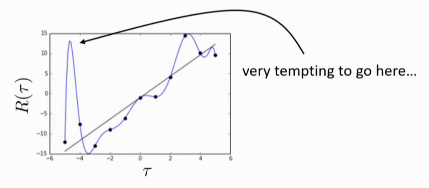

This turns out to be hard. In the figure above, the model is overfitted: the planner may try to exploit a peak that does not exist in the true dynamics, resulting in nonsensical behaviour.
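The exploitation problem can be reproduced with a toy example. It assumes a hypothetical 1-D "action" $x$ with true reward $-x^2$, five training samples with one fixed noise perturbation, and a degree-4 polynomial model that interpolates them exactly, i.e. an overfitted model. A planner that maximizes the model's prediction over a wider range than the data covers is drawn to a spurious peak at the edge of its search range.

```python
import numpy as np

def true_reward(x):
    """Hypothetical true objective, maximised at x = 0."""
    return -x**2

# Five training samples; the label at x = 0.5 carries a fixed noise of -0.1.
x_train = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])
y_train = true_reward(x_train) + np.array([0.0, 0.0, 0.0, -0.1, 0.0])

# Degree-4 polynomial through 5 points: it fits the noise exactly (overfitting).
coeffs = np.polyfit(x_train, y_train, deg=4)

# The planner searches a wider range than the data covers and trusts the model.
candidates = np.linspace(-3.0, 3.0, 601)
x_star = candidates[np.argmax(np.polyval(coeffs, candidates))]
print(x_star, np.polyval(coeffs, x_star), true_reward(x_star))
```

With these numbers the planner picks $x = 3$, where the model predicts a reward of about 13.4 while the true reward is $-9$.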

We can use uncertainty estimation to detect where the models may be wrong, for example by using Gaussian Processes.
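As a sketch of how a Gaussian Process reports its own uncertainty, the code below implements exact GP regression with a squared-exponential (RBF) kernel in NumPy. The 1-D dynamics $s' = \sin(s)$, the training range $[-1, 1]$ and the kernel hyperparameters are illustrative assumptions. The posterior standard deviation is small near the training states and returns to the prior standard deviation far from them, which is the signal a planner can use to avoid regions where the model may be wrong.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.5, variance=1.0):
    """Squared-exponential kernel matrix k(a_i, b_j) for 1-D inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_predict(x_train, y_train, x_query, noise_var=1e-2, length_scale=0.5, variance=1.0):
    """Exact GP regression: posterior mean and standard deviation at x_query."""
    K = rbf_kernel(x_train, x_train, length_scale, variance) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_query, length_scale, variance)  # shape (n_train, n_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = variance - np.sum(v**2, axis=0)  # prior variance minus explained variance
    return mean, np.sqrt(np.maximum(var, 0.0))

# Hypothetical 1-D dynamics s' = sin(s), observed only for s in [-1, 1].
x_train = np.linspace(-1.0, 1.0, 8)
y_train = np.sin(x_train)
mean, std = gp_predict(x_train, y_train, np.array([0.0, 4.0]))
print(std)  # small near the data, close to the prior std (1.0) far from it
```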


For planning under uncertainty, one can optimize the expected value, an optimistic value, or a pessimistic value of the return, depending on the application.
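One way to make these three choices concrete, assuming an ensemble of models that each predict a return for every candidate action: score each action by the ensemble mean (expected), by the mean plus $k$ standard deviations (optimistic), or by the mean minus $k$ standard deviations (pessimistic). The mean ± $k \cdot$std rule, the function name `select_action` and the numbers below are illustrative assumptions; this is only one of several reasonable scoring rules.

```python
import numpy as np

def select_action(predicted_returns, mode="expected", k=1.0):
    """Pick an action index from an ensemble's predicted returns.

    predicted_returns: array of shape (n_models, n_actions), one row per model.
    """
    mean = predicted_returns.mean(axis=0)
    std = predicted_returns.std(axis=0)  # disagreement between models
    if mode == "expected":
        score = mean
    elif mode == "optimistic":
        score = mean + k * std
    elif mode == "pessimistic":
        score = mean - k * std
    else:
        raise ValueError(f"unknown mode: {mode}")
    return int(np.argmax(score))

# Two hypothetical models agree on action 0 but disagree about actions 1 and 2.
preds = np.array([[1.0, 0.0, 4.0],
                  [1.0, 3.0, -3.0]])
print([select_action(preds, m) for m in ("expected", "optimistic", "pessimistic")])  # [1, 2, 0]
```

Pessimism avoids actions whose high predicted return may be a model error, while optimism favours actions the models disagree about, which can help exploration.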

How can we have uncertainty-aware models?

- Use output entropy: high entropy means the model is uncertain.
  - But the model might overfit and be confident, yet wrong!

- Estimate model uncertainty: the model is certain about the data, but we are not certain about the model.

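  - Usually we compute a point estimate $\theta = \arg\max_\theta \log p(\theta \mid \mathcal{D})$

  - Instead, estimate the posterior $p(\theta \mid \mathcal{D})$, whose entropy measures our uncertainty about the model, and predict with $\int p(s_{t+1} \mid s_t, a_t, \theta)\, p(\theta \mid \mathcal{D})\, d\theta$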

  - Or use Bayesian neural networks or bootstrap ensembles to represent uncertainty over the model parameters
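A bootstrap ensemble needs only a resampling loop: each member is trained on a dataset drawn with replacement from the original one, and disagreement between members serves as an estimate of model uncertainty. To keep the sketch short, the members are cubic polynomials fitted with `np.polyfit` rather than neural networks, and the 1-D dynamics $s' = \sin(2s)$ with data only in $[-1, 1]$ is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D dynamics data s' = sin(2 s) + noise, collected only for s in [-1, 1].
s = rng.uniform(-1.0, 1.0, 30)
s_next = np.sin(2.0 * s) + 0.05 * rng.standard_normal(30)

def fit_bootstrap_ensemble(x, y, n_models=5, degree=3):
    """Fit each member on a resample (with replacement) of the dataset."""
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), len(x))
        models.append(np.polyfit(x[idx], y[idx], degree))
    return models

def ensemble_predict(models, x):
    """Mean prediction and disagreement (std across members) at inputs x."""
    preds = np.stack([np.polyval(c, x) for c in models])
    return preds.mean(axis=0), preds.std(axis=0)

models = fit_bootstrap_ensemble(s, s_next)
_, disagreement = ensemble_predict(models, np.array([0.0, 4.0]))
print(disagreement)  # members agree inside the data range and diverge far outside it
```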

Problems with model-based RL

- High dimensionality

- Redundancy

- Partial observability

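Latent space models: separately learn an observation model $p(o_t \mid s_t)$, which is high-dimensional but not dynamic, and a dynamics model $p(s_{t+1} \mid s_t, a_t)$ over a latent state $s_t$, which is dynamic but low-dimensional.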
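A deliberately simple, linear sketch of learning the observation side and the dynamics side separately, assuming hypothetical data: a 2-D latent state with linear dynamics is seen only through 50-D observations $o = Cs$. Step 1 learns an observation model (a linear encoder/decoder from PCA, standing in for a learned probabilistic model of observations); step 2 fits deterministic linear dynamics on the encoded states by least squares. Real latent space models typically use neural networks and probabilistic models, so this only illustrates the structure.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth: 2-D latent state, 1-D action, 50-D observation o = C s.
theta = 0.1
A = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
B = np.array([[0.0], [1.0]])
C = rng.standard_normal((50, 2))

T = 200
states = np.zeros((T + 1, 2))
states[0] = [1.0, 0.0]
actions = rng.uniform(-0.1, 0.1, (T, 1))
for t in range(T):
    states[t + 1] = A @ states[t] + B @ actions[t]
obs = states @ C.T  # shape (T + 1, 50); the agent only sees these

# 1. Observation model: linear encoder/decoder from the top-2 principal directions (SVD).
_, _, Vt = np.linalg.svd(obs, full_matrices=False)
V = Vt[:2].T  # shape (50, 2)

def encode(o):
    return o @ V

def decode(z):
    return z @ V.T

# 2. Dynamics model in latent space: least-squares fit of z_{t+1} ~ [z_t, a_t] W.
z = encode(obs)
X = np.hstack([z[:-1], actions])
W, *_ = np.linalg.lstsq(X, z[1:], rcond=None)

# Predict the next observation: encode, step the latent model, decode.
pred_next_obs = decode(X @ W)
print(np.max(np.abs(pred_next_obs - obs[1:])))
```

Because the toy data is noiseless and exactly linear, the learnt latent coordinates differ from the true state only by an invertible linear map, so the next-observation predictions match up to floating-point error.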