Interval Estimation in Bayesian Statistics

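Suppose that, instead of a point estimate, we would like to identify a region that is likely to contain the true value of the parameter $\theta$. A $100(1-\alpha)\%$ credible set $C$ is a subset of the parameter space such that $P(\theta \in C \mid x) = 1 - \alpha$, where $x$ denotes the observed data.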

Bayesian credible sets are interpreted differently from frequentist confidence intervals.

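In Bayesian statistics, the unknown parameters are treated as random variables, while the observed data are fixed. The probability that $\theta$ lies in the credible set $C$ given the observed data $x$ is $1 - \alpha$.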

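In frequentist statistics, the parameter $\theta$ is a fixed unknown constant and the interval $C$ is random, because it is computed from the data. If we could recompute $C$ for a large number of datasets collected in the same way, then about $100(1-\alpha)\%$ of them would contain the true value of $\theta$.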

Another way to view this is that frequentist and Bayesian notions of coverage describe pre- and post-experimental coverage respectively. Researchers have shown that Bayesian credible sets constructed via some methods will also have almost the correct frequentist coverage.
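The repeated-sampling reading of a confidence interval can be checked with a small simulation. The sketch below is only illustrative: it assumes normally distributed data with a known standard deviation and a standard 95% z-interval for the mean, and the function name `coverage_of_z_interval` and all parameter values are hypothetical choices, not part of the original notes.

```python
import random
import statistics

def coverage_of_z_interval(true_mean=1.0, sigma=2.0, n=25, reps=4000, seed=0):
    """Fraction of simulated 95% z-intervals that contain the true mean."""
    rng = random.Random(seed)
    z = statistics.NormalDist().inv_cdf(0.975)  # two-sided 95% critical value
    half_width = z * sigma / n ** 0.5
    hits = 0
    for _ in range(reps):
        # One "dataset collected the same way"
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        xbar = statistics.fmean(sample)
        if xbar - half_width <= true_mean <= xbar + half_width:
            hits += 1
    return hits / reps

coverage = coverage_of_z_interval()
print(f"empirical coverage: {coverage:.3f}")
```

The printed fraction should be close to 0.95. It describes the procedure over many datasets, not the probability that any single computed interval contains the true mean.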

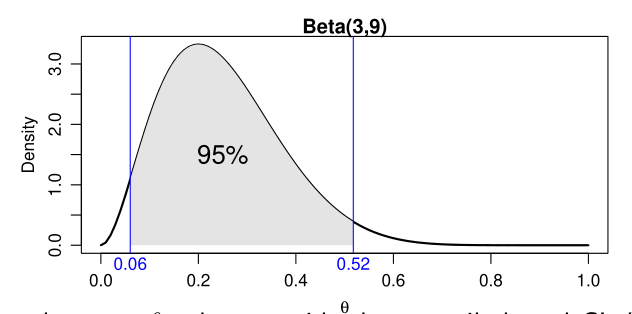

Quantile/equal-tails intervals

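We find two numbers $\theta_{\alpha/2} < \theta_{1-\alpha/2}$ such that $P(\theta < \theta_{\alpha/2} \mid x) = \alpha/2$ and $P(\theta > \theta_{1-\alpha/2} \mid x) = \alpha/2$.

The numbers $\theta_{\alpha/2}$ and $\theta_{1-\alpha/2}$ are the $\alpha/2$ and $1-\alpha/2$ posterior quantiles of $\theta$, and $[\theta_{\alpha/2}, \theta_{1-\alpha/2}]$ is the $100(1-\alpha)\%$ quantile-based credible interval.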

Figure 1: Quantile-based 95% CI for Beta(3,9)
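As a rough sketch of how the quantile-based interval for Beta(3,9) could be computed, the code below inverts the posterior CDF by bisection. To stay within the Python standard library it assumes integer shape parameters, for which the Beta CDF equals a binomial tail probability. The helper names (`beta_cdf`, `beta_quantile`, `equal_tailed_interval`) are hypothetical; in practice a statistics library's Beta quantile function would normally be used instead.

```python
from math import comb

def beta_cdf(x, a, b):
    """CDF of Beta(a, b) for positive integer a and b.

    Uses the identity I_x(a, b) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x)**(n - j) for j in range(a, n + 1))

def beta_quantile(p, a, b, tol=1e-10):
    """Invert the CDF by bisection on [0, 1]."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if beta_cdf(mid, a, b) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def equal_tailed_interval(a, b, alpha=0.05):
    """Cut alpha/2 of the posterior probability from each tail."""
    return beta_quantile(alpha / 2, a, b), beta_quantile(1 - alpha / 2, a, b)

lower, upper = equal_tailed_interval(3, 9)
print(f"95% equal-tailed interval: ({lower:.4f}, {upper:.4f})")
```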

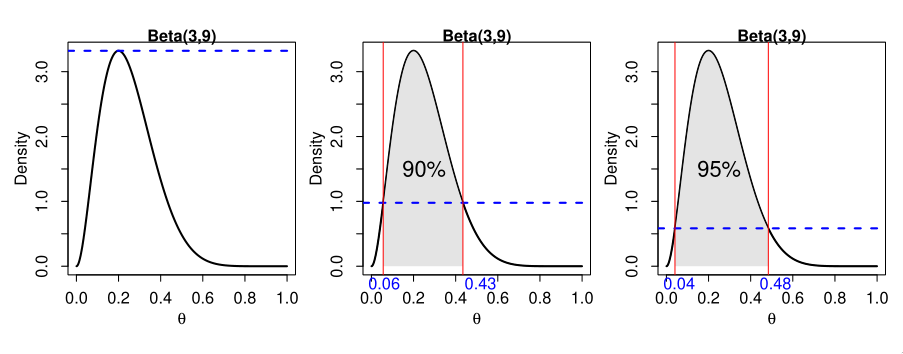

Highest Posterior Density (HPD) region

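The $100(1-\alpha)\%$ HPD credible set is defined as the set:

$$C = \{\theta : \pi(\theta \mid x) \ge k(\alpha)\}$$

where $\pi(\theta \mid x)$ is the posterior density and $k(\alpha)$ is the largest constant such that $P(\theta \in C \mid x) \ge 1 - \alpha$.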

All points in an HPD region have higher posterior density than points outside the region.

To visualize this, imagine drawing a horizontal line across the graph of the posterior density at the height of the mode, and then pushing it down until the corresponding values on the $\theta$-axis enclose $100(1-\alpha)\%$ of the posterior probability.

Figure 2: 90% and 95% HPD regions on a Beta(3,9) distribution

Computing an HPD region generally requires numerical methods. An HPD region might not be an interval if the posterior distribution is multimodal. Some packages, such as coda, assume that the distribution is not severely multimodal.
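One numerical approach, sketched here under the assumption of a unimodal Beta(3,9) posterior, mirrors the horizontal-line picture: bisect on the height of the line until the region above it holds the target probability. The closed-form density and CDF below rely on integer shape parameters, and the names `pdf`, `cdf`, `bisect` and `hpd_interval` are hypothetical helpers written for this illustration, not functions from any package.

```python
from math import comb

A, B = 3, 9  # Beta(3, 9), the distribution used in the figures

def pdf(x):
    # 1 / B(a, b) = (a + b - 1) * C(a + b - 2, a - 1) for integer a, b
    norm = (A + B - 1) * comb(A + B - 2, A - 1)
    return norm * x**(A - 1) * (1 - x)**(B - 1)

def cdf(x):
    # I_x(a, b) = P(Binomial(a + b - 1, x) >= a) for integer a, b
    n = A + B - 1
    return sum(comb(n, j) * x**j * (1 - x)**(n - j) for j in range(A, n + 1))

def bisect(f, lo, hi, tol=1e-12):
    """Root of f on [lo, hi], assuming a single sign change in between."""
    f_lo_positive = f(lo) > 0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if (f(mid) > 0) == f_lo_positive:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def hpd_interval(alpha=0.05):
    mode = (A - 1) / (A + B - 2)

    def region(k):
        # Where the density crosses height k on each side of the mode
        left = bisect(lambda x: pdf(x) - k, 0.0, mode)
        right = bisect(lambda x: pdf(x) - k, mode, 1.0)
        return left, right

    def mass_minus_target(k):
        left, right = region(k)
        return cdf(right) - cdf(left) - (1 - alpha)

    # Search over the line height between 0 (mass 1) and the mode height (mass 0)
    k = bisect(mass_minus_target, 0.0, pdf(mode))
    return region(k)

hpd_low, hpd_high = hpd_interval(0.05)
print(f"95% HPD interval: ({hpd_low:.4f}, {hpd_high:.4f})")
```

Because the search assumes one crossing on each side of the mode, this sketch would not be appropriate for a multimodal posterior, where the HPD region can be a union of intervals.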

Generally, the quantile-based interval equals the HPD region if the posterior is symmetric and unimodal, and is wider otherwise. For unimodal posterior densities, the HPD interval has the shortest length among intervals with the same level of coverage.
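The length comparison can also be illustrated from posterior draws rather than from the density itself. The sketch below assumes 20,000 simulated draws from Beta(3,9) and approximates the HPD interval by the shortest window that covers 95% of the sorted draws, which is only reasonable for a unimodal posterior. The function name `sample_intervals`, the seed and the number of draws are hypothetical, and no claim is made that this matches any particular package's implementation.

```python
import random

def sample_intervals(draws, alpha=0.05):
    """Equal-tailed and shortest (HPD-style) intervals from posterior draws."""
    xs = sorted(draws)
    n = len(xs)
    # Equal-tailed: drop about alpha/2 of the draws from each end
    lo_idx = int(n * alpha / 2)
    hi_idx = n - 1 - lo_idx
    equal_tailed = (xs[lo_idx], xs[hi_idx])
    # Shortest window that covers (1 - alpha) of the sorted draws
    width = int(round((1 - alpha) * n))
    start = min(range(n - width + 1), key=lambda i: xs[i + width - 1] - xs[i])
    shortest = (xs[start], xs[start + width - 1])
    return equal_tailed, shortest

rng = random.Random(42)
draws = [rng.betavariate(3, 9) for _ in range(20000)]
equal_tailed, shortest = sample_intervals(draws)

print(f"equal-tailed length: {equal_tailed[1] - equal_tailed[0]:.4f}")
print(f"shortest length:     {shortest[1] - shortest[0]:.4f}")
```

For a right-skewed posterior such as Beta(3,9), the shortest interval should come out no longer than the equal-tailed one, in line with the statement above.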