Deep Learning With Bayesian Principles - Emtiyaz Khan

- link

- Mohammad Emtiyaz Khan | Deep Learning with Bayesian Principles · SlidesLive

- speaker

- Emtiyaz Khan

- tags

- Bayesian Deep Learning, NeurIPS

Goals

- To understand the fundamental principles of learning from data

- use them to develop learning algorithms

Deep learning assumes that the environment is stationary: e.g. all the information required to classify images is already available in large amounts, and these don’t change quickly over time.

Wish to design life-long learning systems: Bayesian learning has a natural update rule to update posterior beliefs given new data.

| Bayes | DL | |

|---|---|---|

| Can handle large data and complex models? | ❌ | ✔️ |

| Scalable training? | ❌ | ✔️ |

| Can estimate uncertainty? | ✔️ | ❌ |

| Can perform sequential/incremental learning? | ✔️ | ❌ |

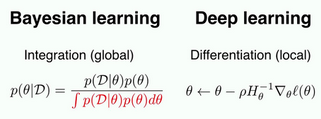

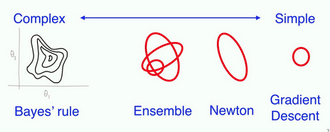

We start with the Bayes rule, and derive many existing algorithms through various approximations.

Deep Learning

Frequentist: Empirical Risk Minimization (ERM) or Maximum Likelihood

\begin{equation} \mathrm{min}_{\theta} l(D, \theta) = \sum_{i=1}^{N} [y_i - f_{\theta}(x_i)^2]

- \gamma \theta^T \theta \end{equation}

Scales well to large data and complex models, good performance in practice. Does not tell you what the model does not know (uncertainty).

Bayesian Principles

- Sample

- Score

- Normalize:

This is a global method, integrating over all models, but does not scale to large problems. Global properties enable sequential update.

To scale Bayesian learning, we need to approximate the posterior distribution. Different posterior approximation methods lead to different learning rules.

Bayesian Learning Rule

- Deep learning rule:

- Bayesian learning rule:

Bayesian learning rule optimizes over all distributions.

- Deep learning algo:

- Bayes learning rule:

Deep learning algorithms are obtained by choosing an appropriate

approximation

Deriving Gradient Descent

Gradient descent is derived from using a Gaussian with fixed

covariance, and approximating