Artificial Intelligence

What is Artificial Intelligence?

Designing agents that act rationally (e.g. through maximising a reward function).

Humans often act in ways that do not maximise their own benefit (irrational).

Acting Humanly: Turing Test

A computer would require:

- natural language processing

- knowledge representation

- automated reasoning

- machine learning

Thinking Humanly

Cognitive science brings together computer models and experimental techniques in psychology to construct testable and provable theories of the human mind.

Thinking rationally

Taking informal knowledge and expressing it in logical terms.

Acting Rationally

A rational agent is one that acts so as to achieve the best outcome or,when there is uncertainty, the best expected outcome.

An agent is a function from percept histories to actions, i.e.

Intelligent Agents

- Agents perceive the environment through sensors

- Agents act upon the environment through actuators

Rational Agents

- For each possible percept sequence, select an action that is expected to maximise its performance measure. The performance measure is a function of a given sequence of environment states.

- Given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

- Agents can perform actions that help them gather useful information (exploration)

- An agent is autonomous if its behaviour is determined by its own experience (with ability to learn and adapt)

Exploration vs Exploitation

Doing actions that modify future percepts (information gathering) is an important part of rationality. In most scenarios, agents don’t know the entire environment a priori.

Specifying Task Environment (PEAS)

- Performance measure

- Environment

- Actuators

- Sensors

Properties of Task Environments

- Fully observable

- whether an agent’s sensors gives it access to the complete state of the environment at any given point in time

- Deterministic

- if the next state is completely determined by the current environment. Otherwise it is stochastic.

- Episodic

- whether an agents experience is divided into atomic episodes. In each episode, an agent receives a percept and performs a single action. In sequential environments short-term actions can have long-term consequences. For this reason, episodic environments are generally simpler.

- Static

- whether the environment can change while the agent is deliberating.

- Discrete

- whether the state of the environment, how time is handled, and the percepts and actions of the agent discretely quantized.

- Single agent

- in some environments, for example chess, there are

multiple agents acting in the same environment.

- cooperative: if the two agents need to work together.

- competitive: if the two agents are working against each other.

- Known

- whether the agent knows the outcome of its actions.

Table-Driven Agent

Simple to implement, and works. However, the number of table entries

is exponential in time:

Reflex agents

A simple reflex agent is one that selects actions on the basis of the current percept, ignoring the rest of the percept history. A condition-action rule is triggered upon processing the current percept. E.g. if the car in front is braking, then brake too.

Basing actions on only the current percept can be highly limiting, and can also lead to infinite loops. Randomized actions of the right kind can help escape these infinite loops.

Model-based Reflex Agents

The agent maintains some internal state that depends on percept history and reflects at least some of the unobserved aspects of the current state. Information about how the world evolves independently from the agent is encoded into the agent. This knowledge is called a model of the world, and this agent is hence a model-based agent.

Goal-based agents

Knowing about the current state of the environment may not be enough to decide on what to do. Agents may need goal information that describes situations that are desirable. Sometimes goal-based action selection is straightforward, but in others searching and planning are required to achieve the goal. Goal-based agents are flexible because the knowledge that supports its decisions is represented explicitly and can be modified, although it is less efficient.

Utility-based agents

Goals provide a binary distinction between good and bad states. A more general performance measure should allow a comparison between world states according to exactly how good it is to the agent. An agent’s utility function is an internalisation of the performance measure. An agent chooses actions to maximise its expected utility. A utility-based agents has to model and keep track of its environment.

Learning Agents

A learning agent can be divided into four conceptual components.

- learning element

- responsible for making improvements

- performance element

- responsible for selecting external actions

- problem generator

- suggests actions that will lead to new and informative experiences

the learning element takes in feedback from the critic on how the agent is doing and determines show the performance element should be modified to do better in the future.

State representations

Atomic Representation

In an atomic representation each state of the world is indivisible, and has no internal structure. Search, game-playing, hidden Markov models and Markov decision processes all work with atomic representations.

Factored Representation

A factored representation splits up each state into a fixed set of variables or attributes, each of which can have a value.

Constraint satisfaction algorithms, propositional logic, planning, Bayesian networks and machine learning algorithms work with factored representations.

Structured Representations

Structured representations underlie relational databases and first-order logic, first-order probability models, knowledge-based learning and much of natural language understanding.

Implications

A more expressive representation can capture, at least as concisely, a everything a more expressive one can capture, plus more. On the other hand, reasoning and learning become more complex as the expressive power of the representation increases.

Problem-Solving

Problem-solving agents use atomic representations, as compared to goal-based agents, which use more advanced factored or structured representations.

The process of looking for a sequence of actions that reaches the goal is called search. A search algorithm takes a problem as input and returns a solution in the form of an action sequence.

Classical Search

This addresses observable, deterministic, and known environments where the solution is a sequence of actions.

How Search Algorithms Work

Search algorithms consider various possible action sequences. The possible action sequences start at the initial state form a search tree.

Search algorithms require a data structure to keep track of the search tree that is being constructed.

- state

- state in the state space to which the node corresponds

- parent

- the node in the search tree that generated this node

- action

- the action that was applied to the parent to generate this node

- path-cost

- the cost, traditionally denoted by

Measuring Performance

- completeness

- is the algorithm guaranteed to find a solution if it exists?

- optimality

- does the strategy find the optimal solution?

- time complexity

- how long does it take to find a solution?

- space complexity

- how much memory is required to do the search?

Uninformed Search Strategies

Breadth-first Search

The root node is expanded first, then all the successors of the root node are expanded next, then their successors, and so on.

| performance | rating |

|---|---|

| completeness | YES |

| optimal | NO |

| time complexity | |

| space complexity |

The shallowest node may not be the most optimal node.

The space used in the explored set is

In general, exponential-complexity search problems cannot be solved by uninformed methods for any but the smallest instances.

Uniform-cost Search

Uniform-cost search expands the node

This is equivalent to BFS if all step costs are qual.

| performance | rating |

|---|---|

| completeness | MAYBE |

| optimal | YES |

| time | |

| space |

Completeness is guaranteed only if the cost of every step exceeds some

small positive constant

Depth-first Search

Always expands the deepest node in the current frontier of the search tree.

| performance | rating |

|---|---|

| completeness | YES |

| optimal | NO |

| time complexity | |

| space complexity |

The time complexity of DFS may be worse than BFS:

DFS only requires storage of

Depth-limited Search

In depth-limited search, nodes at depth of pre-determined limit

| performance | rating |

|---|---|

| completeness | YES |

| optimal | NO |

| time complexity | |

| space complexity |

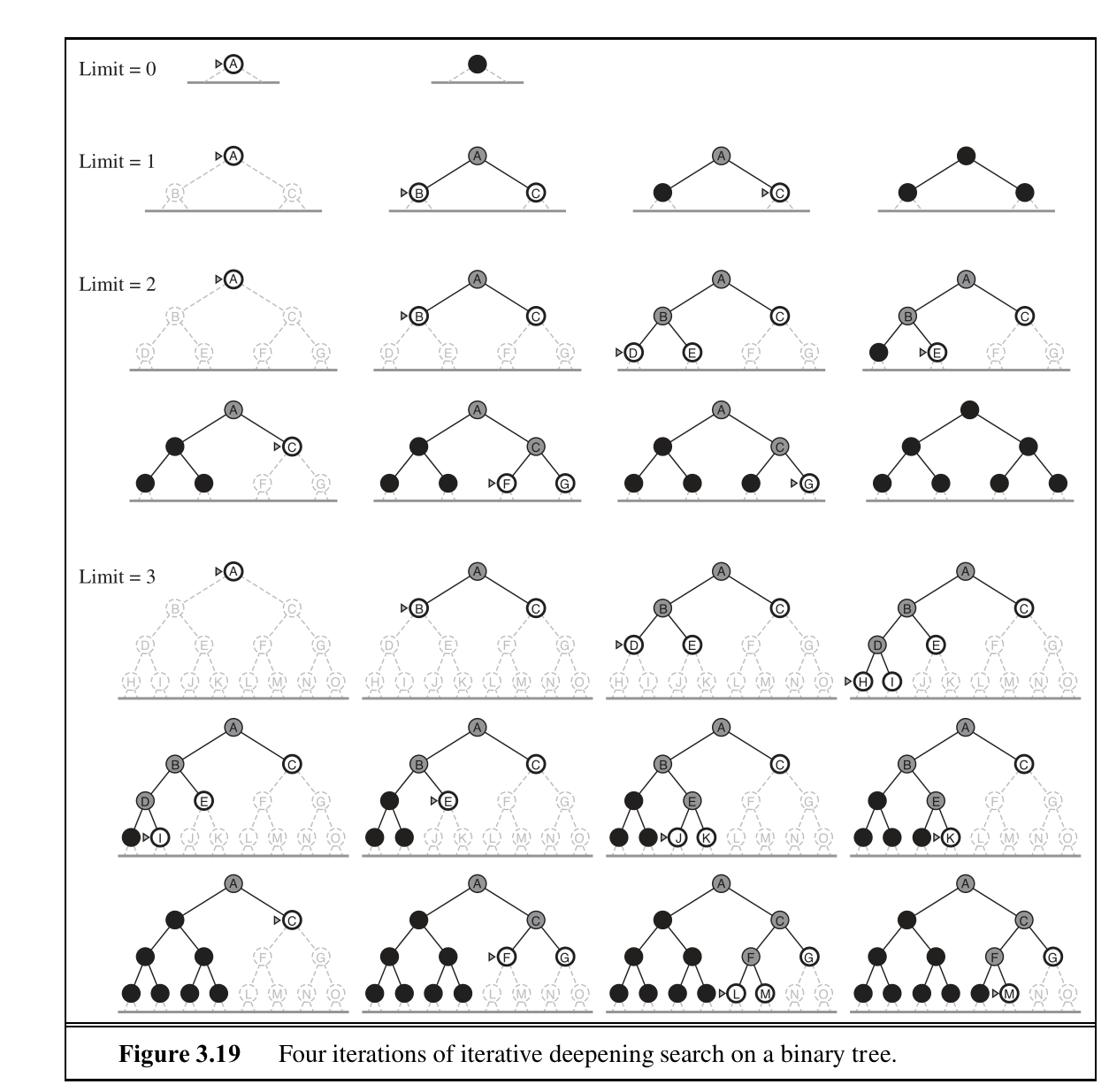

Iterative Deepening Depth-first Search

Key idea is to gradually increase the depth limit: first 0, then 1, then 2… until a goal is found.

| performance | rating |

|---|---|

| completeness | YES |

| optimal | NO (unless step cost is 1) |

| time complexity | |

| space complexity |

- BFS and IDS are complete if

- UCS is complete if

- BFS and IDS are optimal if all step costs are identical.

Bidirectional Search

Conduct two simultaneous searches – one forward from the initial

state, and the other backward from the goal. This is implemented by

replacing the goal test with a check to see whether the frontiers of

two searches intersect. This reduces the time ad space complexity to

Informed Search Strategies

Greedy best-first search

Greedy best-first search tries to expand the node that is closest to

the goal, on the grounds that this is likely to lead to a solution

quickly. It evaluates nodes by using just the heuristic function:

Greedy best-first tree search is incomplete even in a finite state

space. The graph search version is complete in finite spaces, but not

in infinite ones. The worst case time and space complexity is

A* search

It evaluates nodes by combining

Admissible heuristics are by nature optimistic because they think the cost of solving the problem is less than it actually is.

A second, slightly stronger condition is called consistency, and is

required only for applications of A* to graph search. A heuristic

A* search is complete, optimal and optimally efficient with a consistent heuristic. The latter means that no other optimal algorithm is guaranteed to expand fewer nodes than A*.

However, for most problems, the number of states within the goal contour search space is still exponential in the length of the solution.

The absolute error of a heuristic is defined as

A* keeps all generated nodes in memory, and hence it usually runs out of space long before it runs of time. Hence, it is not practical for large-scale problems.

Other memory-bounded heuristic searches include:

- iterative-deepening A* (IDA*)

- Recursive best-first search (RBFS)

- Memory-bounded A* (MA*)

- simplified MA* (SMA*)

Learning to Search Better

Each state in a metalevel state space captures the internal computational state of a program that is searching in an object-level state space. A metalevel learning algorithm can learn from experiences to avoid exploring unpromising subtrees. The goal of the learning is to minimise the total cost of problem solving, trading off computational expense and path cost.

Heuristic Functions

If for any node n

Generating Admissible Heuristics

From Relaxed Problems

Because the relaxed problem adds edges to the state space, any optimal solution in the original problem is, by definition, also a solution in the relaxed problem. Hence, the cost of an optimal solution to a relaxed problem is an admissible heuristic for the original problem. Because the derived heuristic is an exact cost for the relaxed problem, it must obey the triangle inequality and is therefore consistent.

From Subproblems: Pattern Databases

Pattern Databases store exact solution costs for every possible subproblem instance. In the case of the 8-puzzle, every possible configuration of the four tiles and the blank. Each pattern database yields an admissible heuristic, and these heuristics can be combined by taking the maximum value. Solutions to subproblems can overlap: disjoint pattern databases account for this. These work by dividing the problem in a way that each move affects only one subproblem.

From Experience

Inductive learning methods work best when supplied with features of

a state that are relevant to predicting the state’s value. A common

approach to combining features would be through a linear combination:

These heuristics satisfy the requirement that

Beyond Classical Search

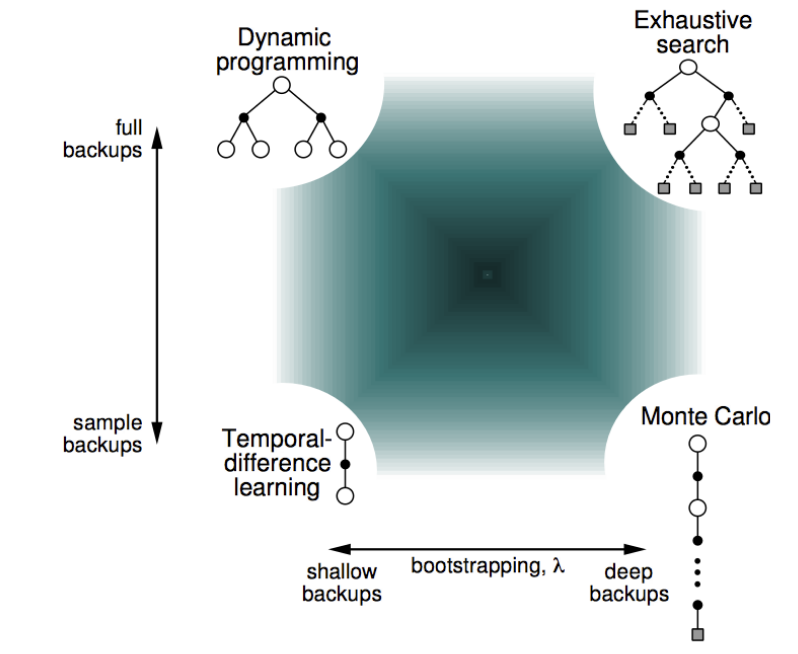

Here, we cover algorithms that perform purely local search in the state space, evaluating and modifying one or more current states rather than systematically exploring paths from an initial state. These include methods inspired by statistical physics (simulated annealing) and evolutionary biology (genetic algorithms).

If an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal.

If the path to the goal doesn’t matter, we giht consider a different class of algorithms, ones that do not worry about the paths at all. Local search algorithms operate using a single current node and generally move only to neighbours of that node. Its advantages include:

- They generally use a constant amount of memory

- They can often find reasonable solutions in large or infinite state spaces where systematic algorithms are not suitable.

Hill-climbing Search

The hill-climbing search is a loop that continually moves in the direction of increasing value.

Consider the 8-queens problem.

Local search algorithms typically use a complete-state formation. The successors of a state are all possible states generated by moving a single queen to another square in the same column.

We could use a heuristic cost function

The global minimum of this function is zero, which only occurs for

perfect solutions. Hill-climbing algorithms typically choose randomly

among the set of best successors having the lowest

Hill-climbing algorithms can get stuck for the following reasons:

- local maxima

- sequence of local maxima

- flat local maximum, or shoulder, from which progress is possible.

Variants

- stochastic hill-climbing

- chooses at random from among the uphill moves; the probability of selection can vary with the steepness of the uphill move. Usually converges more slowly, but finds better solutions.

- first-choice hill-climbing

- stochastic hill-climbing with randomly generated successors until one is generated that is better than the current state. Good when state has many successors.

- random-restart hill-climbing

- conducts hill-climbing searches from randomly generated initial states, until a goal is found. Trivially complete with probability approaching 1.

Simulated Annealing

A hill-climbing algorithm that never makes ‘downhill’ moves towards states with lower-value is guaranteed to be incomplete, because it can be stuck on a local maximum.

function SIMULATED-ANNEALING(problem, schedule)

inputs: problem, a problem

schedule, a mapping from time to 'temperature'

current ← MAKE-NODE(problem, INITIAL-STATE)

for t = 1 to ∞ do

T ← schedule(t)

if T = 0 then return current

next ← a randomly selected successor of current

𝞓E ← next.VALUE - current.VALUE

if 𝞓E > 0 then current ← next

else current ← next only with probability e^(𝞓E/T)

Local Beam Search

https://www.youtube.com/watch?v=RLWuzLLSIgw

Local beam search keeps track of

Local-beam search passes useful information between the parallel

search threads (compared to running random-restart

Stochastic local beam search chooses

Genetic Algorithms

A genetic algorithm is a variant of stochastic beam search in which successor states are generated by combining two parent states rather than by modifying a single state.

GA begins with a set of

The production of the next generation of states is rated by the objective function, or fitness function. A fitness function returns higher values for better states.

Like stochastic beam search, genetic algorithms combine an uphill tendency with random exploration and exchange of information among parallel search threads. crossover in genetic algorithms raises the level of granularity at which the search operates.

function GENETIC-ALGORITHM(population, FITNESS-FN) returns an individual

inputs: population, a set of individuals

FITNESS-FN, a function that measures the fitness of an

individual

repeat

new_population ← empty set

for i = 1 to SIZE(population) do

x ← RANDOM-SELECTION(population, FITNESS-FN)

y ← RANDOM-SELECTION(population, FITNESS-FN)

child ← REPRODUCE(x,y)

if (small random probability) then child ← MUTATE(child)

add child to new_population

population ← new_population

until some individual is fit enough, or enough has elapsed

return the best individual in population, according to FITNESS-FN

function REPRODUCE(x,y) returns an individual

inputs: x,y, parent individuals

n ← LENGTH(x); c ← random(1,n)

return APPEND(SUBSTRING(x,1,c), SUBSTRING(y, c+1, n))

Local Search in Continuous Spaces

One way to avoid continuous problems is simply to discretize the

neighbourhood of each state. Many methods attempt to use the

gradient of the landscape to find a maximum:

where

Local search methods suffer from local maxima, ridges and plateaux in continuous spaces just as much as in discrete spaces.

Searching with Non-deterministic Actions

When the environment is either partially observable or non-deterministic, percepts become useful. In a partially observable environment, every percept helps narrow down the set of possible states the agent might be in. In a non-deterministic environment, percepts tell the agent which of the possible outcomes of its actions has actually occurred. Future percepts cannot be determined in advance, and the agent’s future actions will depend on those future percepts. The solution to a problem is not a sequence but a contingency plan

The solutions for no-deterministic problems can contain nested if-then-else statements, meaning they are trees and not sequences.

AND-OR search trees

A solution for an AND-OR search problem is a subtree that:

- includes every outcome branch leaf

- specifies one action at each of its OR nodes

- includes every outcome branch at each of its AND nodes

function AND-OR-GRAPH-SEARCH(problem) returns a conditional plan, or failure

OR-SEARCH(problem, INITIAL-STATE, problem, [])

function OR-SEARCH(state,problem,path) returns a conditional plan, or failure

if problem, GOAL-TEST(state) then return the empty plan

if state is on path then return failure

for each action in problem, ACTIONS(state) do

plan ← AND-SEARCH(RESULTS(state,action), problem, [state | path])

if plan ≠ failure then return [action | plan]

return failure

function AND-SEARCH(states,problem,path) returns a conditional plan, or failure

for each s_i in states do

plan_i ← OR-SEARCH(s_i,problem,path)

if plan_i = failure then return failure

return [if s_1 then plan_1 else if s_2 then plan_2 ...]

(stop at AIMA 4.4)

Adversarial Search

Competitive environments, in which the agent’s goals are in conflict, give rise to adversarial search problems.

Game theory views any multi-agent environment as a game, provied that the impact of each agent on the others is significant.

Games often have large branching factors, and require making some decision even before computing the optimal decision.

Pruning allows us to ignore portions of the search tree that make no difference to the final choice, and heuristic evaluation functions allow us to approximate the true utility of a state without doing a complete search.

A game can be formally defined as a search problem with the following elements:

- the initial state, which specifies how the game is set up at the start

- Defines which player has the move in a state

- Returns the set of legal moves in a state

- The transition model, which defines the result of a move

- Terminal test, which is true when the game is over, and false otherwise.

- A utility function defines the numeric value for a

game that ends in terminal state

The initial state,

Optimal Strategy

The optimal strategy can be determined from the minimax value of

each node (

Minimax uses utility function on leaf nodes, backing up through the tree, setting the node value to be the minimum of the children.

Alpha-Beta Pruning

Eliminate parts of the search tree that do not affect decision.

Classical Planning

Problem-solving agents that deal with atomic representations of states require good domain-specific heuristics to perform well. The hybrid propositional logical agent can find plans without domain-specific heuristics because it uses domain-independent heuristics based on the logical structure of the problem. However, it relies on ground propositional inference, and suffers when there are large numbers of actions and states.

Planning Domain Definition Language (PDDL) was created in response to these deficiencies.

Each state is represented as a conjunction of fluents that are ground, functionless atoms. Database semantics is used, which involves:

- closed-world assumption

- all fluents not mentioned are false

- unique names assumption

- fluents with different names are distinct

State representations are carefully designed so that they can be manipulated by set operations or logical inference.

Actions are defined in terms of the preconditions and effects. Preconditions and effects are described in terms of a conjunction of literals.

Complexities of classical planning

PlanSAT is the question of whether there exists any plan that solves a planning problem. Bounded PlanSAT asks whether there is a solution of length k or less.

While the number of states is finite, adding function symbols make them infinite, making these problems semi-decidable at best. Certain restrictions can reduce the questions into a P class problem.

However, most agents would not be asked to derive plans for worst-case problem instances. For many problem domains, bounded PlanSAT is NP-complete, while PlanSAT is in P.

State-space search for planning

The first approach is forward (propogation) state-space search, which searches forward from the initial state. However, it is inefficient, for the following reasons:

- It tends to explore irrelevant actions

- Planning problems tend to have large state spaces, and relatively small problems will be an issue without a good heuristic

It turn out that good domain-independent heuristics can be derived for forward search, which makes it feasible.

Alternatively, we can do a backward (regression) state-space search, which looks for actions that can lead to the goal. Unlike forward search, backward search only explores relevant actions, hence has a low branching factor. However, backward search deals with sets, which are make it harder to derive good domain-independent heuristics.

Heuristics for planning

Framing the search problem as a graph where the nodes are states and the edges are actions. We can think of a number of ways to relax the problem, generating admissible heuristics:

- Add more edges to the graph, making it easier to find a path

The ignore preconditions heuristic drops all preconditions from actions, and every action becomes applicable in every state. We count the number of actions required such that the union of the action’s effects satisfy the goal. This is called the set-cover problem, which is unfortunately NP-hard. We can also ignore selected preconditions of actions.

The ignore delete list heuristic, drops all negative literals in goals and preconditions. This way, an action cannot undo progress towards the goal, and each action taken would monotonically progress towards it.

- Grouping multiple nodes together, shrinking the size of the graph

We can reduce the number of states by forming a state abstraction – a many-to-one mapping from states in the ground representation of the problem to the abstract representation. For example, one can ignore some fluents.

The key idea in defining heuristics is decomposition: diving a problem into parts. Subgoal independence is the assumption that the cost of solving a conjunction of subgoals is approximated by the sum of the costs of solving a subgoal independently.

Other classical planning approaches

We can translate a problem description in PDDL to a form that can be processed by SATPlan. The steps are below:

- Propositionalize the actions: replace each action schema with a set of ground actions formed by substituting constants for each of the variables. These ground actions are not part of the translation, but will be used in subsequent steps.

- Define the initial state: assert

- Propositionalize the goal: the goal becomes a disjunction over all of its ground instances, where variables are replaced by constants.

- Add successor-state axioms: For each fluent

Where

- Add precondition axioms: for each ground action

- Add action exclusion axioms: say that exactly one ground action can occur at each step.

function SATPLAN(init, transition, goal, Tmax) returns solution or failure

""inputs: init, transition, goal constitute the problem

description

Tmax: upper limit for plan length""

for t = 0 to Tmax do

cnf <- translate-to-sat(init, transition, goal, t)

model <- SATSolver(cnf)

if model is not null then

return extract-solution(model)

return failure

Decision Theory

Reference: AIMA Chapter 16

Utility theory combines with probability theory to yield a decision-making agent, that can make rational decisions based on what it believes and what it wants. This is opposed to goal-based agents, that make binary decisions between good and bad states.

Combining beliefs and desires under uncertainty

Under nondeterministic, partially observable environments, the result

of an action cannot be represented by a single number.

The agent’s preferences are captured by a utility function

The principle of maximum expected utility (MEU) says that a rational agent should choose the action that maximizes the expected utility. If an agent acts to maximise a utility function that correctly reflects all possible outcomes, then it will achieve the highest possible performance score (averaged across all environments).

Axioms of Utility Theory

To show why MEU is a rational choice, we turn to utility theory. First, we define notation:

The axioms of utility theory are:

- orderability

- Exactly one of

- transitivity

- continuity

- substitutability

- monotonicity

- Decomposability

von Neumann and Morgenstern showed that following these axioms, it can be shown that.

- Utility functions exist.

- The utility of a lottery, can be represented as the sum of

probability of each outcome, multiplied by the utility of that

outcome:

Utility functions are not unique: in fact, that are not changed with

affine transformations, of the form

Utility assessment and utility scales

To build a decision-theoretic system, we must first work out what the agent’s utility function is. This process is called preference elicitation, which involves presenting choices to the agent and using the observed preferences to pin down the underlying utility function.

We have established above that utility functions are not unique, but

it helps to have a normalized utility. Normalized utilities fix the

utility of a “best possible prize” at

Given this utility scale, we can assess the utility of a prize

Multi-attribute utility functions

When outcomes are characterized by two or more attributes, we need a multi-attribute utility function.

Dominance

We say that there is strict dominance of

If

Preference structure and multi-attribute utility

Multi-attribute utility theory is based on the supposition that preferences of typical agents have structure. This alleviates the difficulty in expressing a utility function with many attributes and possible distinct values.

One simple regularity in preference structures is called preference

independence. Two attributes

If all attributes are mutually preferentially independent, then an agent’s preference can be described by maximising the function:

An example is:

Under uncertainty, the mathematics gets a bit more complicated.

Utility independence extends preference independence to cover

lotteries: a set of attributes

Mutual utility independence implies that the agent’s behaviour can be

described using a multiplicative utility function. If we denote

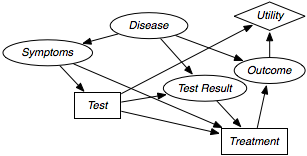

Decision Networks

Decision networks extend Bayesian networks with nodes for actions and utilities. An example of a decision network is below:

The notation is as such:

- chance nodes (oval)

- these represent random variables

- decision nodes (rectangles)

- these represent points where the decision maker has a choice of actions.

- utility nodes (diamonds)

- represent the agent’s utility function

A simplified form is used in many cases. The notation remains identical, but the chance nodes describing the outcome state are omitted. Rather than representing a utility function on outcome states, the utility node represents the expected utility associated with each action. This node is associated with an action-utility function (also known as Q-function).

Information Value Theory

The value of a given piece of information is defined to be the difference in expected value between the best action before and after information is obtained. This is one of the most important parts of decision-making: knowing what information to obtain. Information has value to the extent that it is likely to cause a change of plan and to the extent that the new plan is significantly better than the old plan.

Mathematically, the value of perfect information (VPI) is defined as:

This is obtained by considering the best action (maximum expected utility) before and after obtaining the information, and averaging it across all possible values for the new information, using our current beliefs of its value.

-

Properties of the value of information

First, the expected value of information is non-negative.

The theorem is about the expected value, and not the real value. This means that information can sometimes lead to a plan that is harmful.

It is important to note that VPI is dependent on the current state of information. VPI is not additive in general:

VPI is order independent. That is:

-

Information Gathering Agents

we can implement a myopic information-gathering agent, by using the VPI formula shortsightedly.

function INFORMATION-GATHERING-AGENT(percept) returns an action persistent: D, a decision network integrate percept into D j <- the value that maximises VPI(E_j) / Cost(E_j) if VPI(E_j) > Cost(E_j) return REQUEST(E_j) else return the best action from DIf we know the associated cost of observing evidence, it simply retrieves the evidence if the cost of observing it is less than the value it provides.

RANDOM

Simon’s Ant

Simon, noble prize in economics

The complexity of the behaviour is the manifestation of the complexity of the environment and not the complexity of the program.

REFILE

Papers

Improving Policy Gradient by Exploring Under-Appreciated Rewards

The REINFORCE algorithm minimizes the KL divergence between

Hence, a entropy regularization loss is added to encourage exploration.

However, this exploration is undirected, and requires a small regularization coefficient to prevent too much random exploration.

Optimizing in the mean-seeking direction of the KL divergence is to learn a policy by following:

In RL, the reward landscape is unknown, hence sampling from

where

One can view the weights as the discrepancy between scaled rewards

In UREX, both the RAML objective and the expected reward objective is combined and jointly maximized.